In the mid 1990’s my Australian company made the decision to purchase a Media 100 system. That remains the best business decision I ever made (and selling it to jump to Final Cut Pro 1 was the second best business decision). It also meant we were migrating from Amiga computers to Macs. Given that I already had a graphic designer on staff for titles, illustrations and animations, I decided to delight clients by having our designer create a full color slick for the (then) VHS deliverables. (Masters simply got descriptive labels.)

Until that point we’d only done black and white printing, and it’s easy to proof what you’re going to get on a B&W laser printer. Not so with color. Color output wasn’t as common then as it is now and we didn’t get the first Kinkos until very late in the 1990’s, so we really only had one choice for our runs of 2-3 covers for each job.

This became a serious problem when – while developing a food product for my parent’s company during the period I managed it (in addition to my own two companies) – we needed a very specific purple on mockup packaging we were presenting to food buyers at the national department store chains in Australia. Cadbury – Australia’s biggest chocolate company – have always used a specific purple in their packaging, and had just spent several million dollars on a campaign that heavily featured this purple. Since the new product was a chocolate variation on a traditional English Christmas Pudding, having the purple match was beyond important. And we got blue-purple, and red-purple: seemingly every color except the one we wanted.

The problem was, what we saw on the screen and what they printed were two entirely different images. Just like that, the print industry’s color management problems were now my color management problems. There’s nothing like first hand knowledge of the problem to appreciate the cure!

By early 1998 we were fully into publishing, with the release in February 1998 of the Media 100 Editor’s Companion. By this time we had learnt – although that particular output bureau never did – the joys and benefits of ColorSync. While the interior was done with xerography (i.e. photocopying), the color covers were done in Sydney by direct-to-plate digital offset, and we never had color accuracy problems with an output bureau that used ColorSync.

ColorSync was the solution the print industry needed to deal with color consistency and matching. The challenge is quite significant when you consider that each device treats color slightly differently: each has its own “color profile”, stored as a ColorSync profile. What ColorSync facilitates is a color accurate workflow, also referred to as a color managed workflow.

The challenge in print is to ensure that a scanned image reproduces with correct colors on an RGB display and prints consistently on a wide range of types of printers (and size of output) in CMYK color. On computers the images are additive (three light sources) while on paper they are subtractive. If you worked hard you couldn’t create a less consistent workflow.

And yet, for the last 15 years or so, this has not been a problem. Colors in Photoshop, Illustrator, InDesign, Quark, etc. are accurately reproduced in the final output despite the different color models, device variations, display variations, differing types of paper and even ink variations. This has been possible because of ColorSync, Apple’s implementation of ICC color management. The ICC profile format behind it was established by an industry consortium that included Apple and Adobe, and is now supported on every platform and by all manufacturers of scanning or printing hardware.

What is ColorSync?

This I knew when I wrote recently on how ColorSync might be used as part of Final Cut Pro X’s color management, but from the comments it quickly became obvious that I was very uncertain about:

- How does ColorSync work with video files, and specifically

- How does Final Cut Pro X use ColorSync to accurately monitor color?

So I took it upon myself to start researching the topic. I very quickly discovered that, while there is a lot of information regarding ColorSync and printing workflows, there was really nothing about ColorSync and video short of an OS 9 era article on ColorSync and QuickTime, noting that we can add ColorSync profiles to QuickTime movies using Terran Interactive’s Media Cleaner Pro! (That really dates it. Neither the company nor the product still exists.)

I took the opportunity to ask my contacts at Apple some specific questions about how ColorSync works in Final Cut Pro X and then synthesized that with the best information I could find about ColorSync in general to write up what I found. What was also interesting is that, while ColorSync has been mentioned in the PR materials and demos, there has been no explanation as to how that facilitates accurate color. This is my attempt to redress that lack.

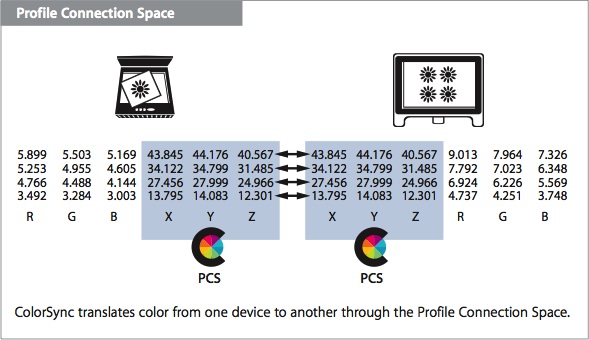

ColorSync is built on ICC profiles and the two terms are interchangeable: an ICC profile managed workflow is a ColorSync managed workflow. Each device – scanner, printer, camera, monitor – has at least one ICC profile. In an oversimplified explanation the ICC profile tells ColorSync (or equivalent engine in Adobe’s applications) how this device’s color representation differs from the standard. In this case it’s the XYZ Profile Connection Space: a colorspace with a very wide gamut.

So, for a given scanner the ICC profile is used to create an accurate representation of the scanner’s result in the Profile Connection Space (PCS from here on) and the display’s ICC profile tells ColorSync how to accurately display that PCS on that specific monitor for the most accurate fidelity to the original image content data.

In simple terms each device tells ColorSync how that device’s files relate to the PCS either to convert to the PCS or convert from the PCS to a display or printer. This way, each display or printed copy is color accurate to the original file, despite variations in printers or displays.
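The round trip through the PCS described above can be sketched in a few lines of Python. This is not how ColorSync itself is implemented. It assumes simple matrix-only profiles, linear-light RGB values, and a shared D65 white point (real ICC profiles use a D50-referenced PCS with chromatic adaptation, plus tone curves or lookup tables). The function names, and the choice of a display with P3 primaries as the destination, are assumptions made for illustration.

```python
# Sketch: source RGB -> XYZ connection space -> display RGB.
# Matrices are derived from chromaticity coordinates; linear-light values only.

D65 = (0.3127, 0.3290)                                            # white point (x, y)
REC709_PRIMARIES = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3_PRIMARIES = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]   # assumed display


def xy_to_xyz(x, y):
    """Chromaticity (x, y) to XYZ with Y normalised to 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def invert3(m):
    """Invert a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def rgb_to_xyz_matrix(primaries, white):
    """Build the device RGB -> XYZ matrix (the 'to PCS' half of a profile)."""
    cols = [xy_to_xyz(x, y) for x, y in primaries]
    m = [[cols[c][r] for c in range(3)] for r in range(3)]
    # Scale each primary so that RGB (1, 1, 1) lands exactly on the white point.
    scale = mat_vec(invert3(m), xy_to_xyz(*white))
    return [[m[r][c] * scale[c] for c in range(3)] for r in range(3)]


def convert(rgb, source_primaries, dest_primaries, white=D65):
    """Source device RGB -> connection space -> destination device RGB."""
    to_pcs = rgb_to_xyz_matrix(source_primaries, white)
    from_pcs = invert3(rgb_to_xyz_matrix(dest_primaries, white))
    return mat_vec(from_pcs, mat_vec(to_pcs, rgb))


rec709_red = [1.0, 0.0, 0.0]
print(convert(rec709_red, REC709_PRIMARIES, P3_PRIMARIES))
```

Pure Rec 709 red needs different numbers on the wider-gamut display to look the same, while white stays white because both devices share a white point. Neither device needs to know about the other; each only describes itself relative to the connection space.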

Workflows that correctly follow this practice are known as Color Managed workflows. (Check and see if you’re reading this in a color managed browser.) From that page:

This is happening BECAUSE your color-managed browser is reading each file’s embedded profile and Converting or Mapping them to your monitor profile for a theoretical display of True Color.

In practice, while you may have to set up Photoshop or some other tools, for the most part, like the color management in Safari demonstrated from the link above, it just happens. You’ll find scanners and printers install ColorSync (ICC) profiles for all the functions – a scanner/printer will have a scan-to-PCS profile, and a PCS-to-printer profile – and OS X uses them. Earlier versions of the OS required some setup but recent versions (10.5 onward at least) have implemented ColorSync so it “just happens”. As long as each device has a profile, and files are tagged with the appropriate source ICC profile, color accuracy along the entire process is assured.

Color consistency in the video world

Meanwhile, it was easy to monitor video: use a calibrated video monitor. Connect output of your edit system to said calibrated monitor. If you were very particular about your video signal you connected Waveform and Vectorscope to the output as well, so you could really see what’s going on in the signal.

The accuracy of the color entirely depended on how good a monitor you put on the end of the chain. Now in a perfect world this would be a broadcast level monitor, calibrated at least once a year, that could be relied on. I confess, I never had one, even though I delivered a decent number of TV Commercials for national TV broadcast, and other content that went to air. The majority of my editing career, however, has been for non-broadcast purposes.

Even today, I support systems that do work for major studios and none of them have a broadcast grade monitor, let alone calibrate it. One system is finishing HD footage to a 10+ year old SD TV set of no particular brand. Another restoring historic footage works primarily with a decent (but domestic) Plasma display that’s distinctly off axis from the editing position.

I’ve set up movie edit stations that have monitored on a Cinema Display via an AJA SDI to DVI adapter (with CLUT), or on an LCD TV. In fact the AJA adapter is doing in hardware exactly what ColorSync is doing in software. These movies were not graded on these systems, just edited, so that’s probably fair. Another client, working on a TV series for cable networks, has a broadcast monitor (JVC 24U) only in the grading room; the other four bays all work with consumer LCD or plasma.

Color fidelity required that the NLE made no unintentional changes to the signal coming in and ultimately the accuracy of color depended entirely on how accurately the specific display represented the colorspace it was attempting to represent. In SD that would normally be Rec 601 and for HD normally Rec 709.

It’s up to the NLE software to convert colorspace from the different source profiles it encounters: not only Rec 601 and 709 in video files but sRGB or any of another dozen possible profiles for still images.

This type of monitoring – a conventional video output – allowed monitoring in the destination colorspace, but appears to be not part of Final Cut Pro X’s design, at least according to Matrox. So, how does Final Cut Pro X ensure color consistency? Of course, ColorSync.

ColorSync was implemented in QuickTime. Badly. Final Cut Pro 7 did not support it, instead working with its own color conversion/matching technology.

Final Cut Pro X and ColorSync

As I’ve discussed, the important considerations for ColorSync are:

- The colorspace, which can be considered to be the equivalent of an ICC profile, must be known and accurate for the file (taking the place of the source device profiles in print workflows);

- The rendering engine must use ColorSync to convert from source to destination colorspace/profile; and

- The frames must be converted for the desired output, be it a Cinema Display, SD 601 or HD 709 result.

This is exactly what Final Cut Pro X does.

As files are ingested, Final Cut Pro X will read any ColorSync information if the files are tagged with information about their colorspace. If it’s a QuickTime file that contains an explicit tag for color information, that is respected by Final Cut Pro X, Motion 5 and Compressor 4.

If there’s not an explicit tag, then Final Cut Pro X infers the colorspace from known information. For example, DV files automatically imply an NTSC Rec 601 colorspace, while a 1280 x 720 or 1920 x 1080 file will be tagged as HD Rec 709. These are quite reasonable assumptions and yet another use of Inferred metadata in the Final Cut Pro X world. Beyond those examples, Final Cut Pro X will simply make an intelligent guess based on whatever information is available. Fortunately, image size and aspect ratio are very strong clues to how the source color should be interpreted (the purpose of tagging it). 720 x 576 pixel files are almost certainly PAL SD files and so Rec 601 PAL is reasonably inferred.
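The kind of inference described above might be sketched like this in Python. It is purely illustrative: the function name, the returned labels, the `is_dv` flag and the 720 x 480/486 NTSC rule are assumptions made for the example, not Apple’s actual logic or API.

```python
def infer_colorspace(width, height, explicit_tag=None, is_dv=False):
    """Guess a source colorspace label for a clip (hypothetical helper).

    An explicit tag in the file always wins; otherwise fall back to
    frame-size heuristics like the ones described in the text.
    """
    if explicit_tag:
        return explicit_tag                      # respect what the file says
    if (width, height) in {(1280, 720), (1920, 1080)}:
        return "Rec 709"                         # HD frame sizes
    if (width, height) == (720, 576):
        return "Rec 601 PAL"                     # PAL SD frame size
    if is_dv or (width, height) in {(720, 480), (720, 486)}:
        return "Rec 601 NTSC"                    # DV / NTSC SD (assumed rule)
    return "unknown"                             # caller must make a guess


print(infer_colorspace(1920, 1080))                       # Rec 709
print(infer_colorspace(720, 576))                         # Rec 601 PAL
print(infer_colorspace(1920, 1080, explicit_tag="sRGB"))  # sRGB
```

The ordering matters: an explicit tag is checked first so that inference only ever fills a gap, and never overrides what the file declares about itself.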

Generally it’s older files that have no useful information and require inferring colorspace, but since there really are so few colorspace choices for video, compared with print where each device has its own characteristics, the source ColorSync information is likely to be highly accurate.

I’m sure someone will take me to task on the fact that Rec 601 doesn’t fully describe the color profile because there are no primaries defined, but Final Cut Pro X uses other information in the file to determine which of the well-known primaries should be associated with the file.

So we have source color profile information associated with the files. Now it’s up to Final Cut Pro X to ensure that the rendering is accurate.

For Final Cut Pro X and Motion Apple created a shared render engine, touted as the “Linear Light Engine” at the NAB Supermeet Preview, although that branding seems to have been dropped ahead of release. This shared render engine uses ColorSync to conform an image from one colorspace to another. ColorSync works out the steps required for a specific transform and then assembles them into a series of operations in the GPU processing pipeline. Or in simpler terms, it manages the transforms so the source image content is accurately represented in the processing space.

There is no assumption about the input colorspace at render time. ColorSync profiles were derived or inferred on ingest or come from the image file. Most processing uses the Rec 709 primaries with a linear (1.0) gamma, ready for the final stage.
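Why process with a linear (1.0) gamma at all? A short Python sketch can show the difference. It assumes the Rec 709 transfer function as the source encoding; whether Final Cut Pro X linearizes with exactly this curve is not something the information above confirms, so treat that as an assumption. The function names are illustrative. The sketch compares a 50/50 mix of black and white computed on encoded values with the same mix computed on linear-light values.

```python
def rec709_to_linear(v):
    """Invert the Rec 709 transfer function: encoded value -> linear light."""
    if v < 0.081:
        return v / 4.5
    return ((v + 0.099) / 1.099) ** (1 / 0.45)


def linear_to_rec709(l):
    """Rec 709 transfer function: linear light -> encoded value."""
    if l < 0.018:
        return 4.5 * l
    return 1.099 * l ** 0.45 - 0.099


def blend_encoded(a, b, t=0.5):
    """Mix two encoded values directly (what a non-linear pipeline does)."""
    return a * (1 - t) + b * t


def blend_linear(a, b, t=0.5):
    """Linearize, mix as light would mix, then re-encode."""
    mixed = rec709_to_linear(a) * (1 - t) + rec709_to_linear(b) * t
    return linear_to_rec709(mixed)


black, white = 0.0, 1.0
print("encoded blend:", blend_encoded(black, white))
print("linear blend: ", blend_linear(black, white))
```

The two results differ noticeably: the linear-light mix re-encodes to a visibly brighter value than the naive mix of encoded numbers. Dissolves, blurs and scaling all involve this kind of mixing, which is the usual argument for doing the math in linear light and applying the output encoding only at the final stage.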

The third point above is that the render engine has to accurately prepare that processing space for the appropriate output. If outputting to a Cinema Display, then the render engine conforms the processing space so that the visual appearance on the Cinema Display matches how the image should look. (This works well with the recommended gamma and color temperature for the display: 2.2 and 6500 K respectively.) For the most accurate results you’ll want to create a ColorSync profile for the specific monitor rather than using the supplied “generic for this type of monitor” profile that ships. You can use the ColorSync Utility for that, or a tool that reads values off the screen to generate the profile.

If you’re working with an HD Rec 709 file but request an output for DVD, then the 709 frames (and other graphics from their colorspace) are converted and output so they are correct for the Rec 601 colorspace of a DVD.
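One part of an HD-to-SD conversion like this can be sketched in Python: re-encoding Y'CbCr values from the Rec 709 luma coefficients to the Rec 601 ones. This is a partial illustration only. It uses normalized floating-point values (Y' in 0..1, Cb and Cr in -0.5..0.5), ignores the difference in primaries, scaling and legal ranges, and the function names are assumptions made for the example rather than anything from Final Cut Pro X.

```python
REC709 = (0.2126, 0.0722)   # (Kr, Kb) luma coefficients
REC601 = (0.299, 0.114)


def rgb_to_ycbcr(r, g, b, kr, kb):
    """Gamma-encoded R'G'B' -> Y'CbCr for the given luma coefficients."""
    kg = 1.0 - kr - kb
    y = kr * r + kg * g + kb * b
    cb = (b - y) / (2.0 * (1.0 - kb))
    cr = (r - y) / (2.0 * (1.0 - kr))
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr, kr, kb):
    """Y'CbCr -> gamma-encoded R'G'B' for the given luma coefficients."""
    kg = 1.0 - kr - kb
    r = y + 2.0 * (1.0 - kr) * cr
    b = y + 2.0 * (1.0 - kb) * cb
    g = (y - kr * r - kb * b) / kg
    return r, g, b


def convert_709_to_601(y, cb, cr):
    """Decode with Rec 709 coefficients, re-encode with Rec 601 ones."""
    return rgb_to_ycbcr(*ycbcr_to_rgb(y, cb, cr, *REC709), *REC601)


green_709 = rgb_to_ycbcr(0.0, 1.0, 0.0, *REC709)
print("green as Rec 709 Y'CbCr:", green_709)
print("same green as Rec 601:  ", convert_709_to_601(*green_709))
# What happens if the conversion is skipped and 709 data is read as 601:
print("misread as Rec 601 RGB: ", ycbcr_to_rgb(*green_709, *REC601))
```

The last line shows why the conversion matters: decoding Rec 709 data with Rec 601 coefficients no longer returns pure green, which is the kind of color shift that appears when a file’s colorspace is ignored.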

So Final Cut Pro X determines the colorspace (ICC Profile) of the source on ingest; manages color using ColorSync through the rendering engine and then uses ColorSync to make sure that the representation on the display – whatever type of display – is also an accurate representation.

Meaning, there is no need for an external broadcast monitor in the classic sense. What you see on a monitor that has a ColorSync profile, is an accurate representation on that monitor of the source colors displayed on a Rec 709 monitor. And you don’t have to do a thing to get the goodness!

Not convinced? Well, coincidentally the Academy of Motion Picture Arts and Sciences’s “ACES or Academy Color Encoding Specification” initiative is planning something almost identical to ColorSync, but specific to the needs of motion picture post production, particularly with so much digital manipulation and inclusion of digital elements. (Look for the heading “ACES: Academy Color Encoding Specification”).

Beyond Rec 709

While the ACES initiative is largely about colorspaces used in digital cinema, it should be pointed out that a “broadcast video monitor” would be no help for colorspaces other than Rec 709 (for HD). So if you want to work with DCI-P3 (also known as SMPTE-431-2), for example, in a traditional NLE you’d be stuck previewing in the wrong colorspace.

With a color managed/ColorSync workflow, if you have DCI-P3 media and the appropriate source ColorSync/ICC Profile (or colorspace definition), then ColorSync in Final Cut Pro X would present an accurate representation of that source on the computer monitor.

Mixing any of these colorspaces into any one, and then outputting the appropriate colorspace becomes no problem when using ColorSync.

Conclusion

While it’s a significant change from traditional workflows, the approach taken by Apple with Final Cut Pro X is a better fit for what happens in edit bays outside the big studios, where the problem with early Final Cut Pro users wasn’t that they weren’t monitoring on calibrated broadcast monitors but that they were monitoring only on the computer display. How many times did I, and the other Final Cut Pro 1 pioneers, have to remind people that the computer display is not an accurate representation of the video!

Well, 2.2 gamma on OS X (since Snow Leopard) and ColorSync have made that advice not only null and void, but downright wrong. With a ColorSync workflow, the view on the computer monitor, particularly in full screen mode, will be more accurate than using a consumer television as a display, with the sole exception of interlaced video output. If you’re delivering for web, for LCD or plasma screen (i.e. all currently available displays) the display is progressive. Produce and edit accordingly.

I don’t think we have broadcast video output from Final Cut Pro X, because we have something better that accommodates today’s colorspaces and will expand to accommodate whatever the future holds as well.

A disclaimer. I do not have the equipment available to test this: I don’t have a broadcast monitor nor a system that could output to it via SDI or even analog. (I could do DV via Firewire, but really….) I am confident that the solution that solved my inconsistent print color issues is robust enough, with the right software design, to manage my consistent video image needs.

The purpose of monitoring video on a broadcast monitor is to ensure that we have consistent color accuracy from a known reference. The value of a ColorSync workflow is that we ensure that we have consistent color accuracy wherever it’s shown. It’s a superior choice that avoids the limitations and expense of the previous “standard workflow.” Instead of having to build in a single colorspace on a monitor, Rec 601 or 709 style, with ColorSync all wide gamut monitors can be calibrated (ideally with a colorimeter and on that specific monitor) and used as reference monitors.

57 replies on “What is the secret to Final Cut Pro X’s color management?”

Thanks for the write up Philip. Having a color managed work for video that is similar to what print uses has been on my ‘want list’ for a long time. Not just to make it easier for content creators but also to make it easier for content consumers to see how things are supposed to look.

I’m still wondering if interlaced signals and video frame rates will be properly handled by FCP 10, the GFX card and the monitor.

I just updated the article with a reference to interlace. Interlace is the spawn of the devil, an artifact of poor bandwidth in the 1940’s and something that is now a real problem for displays that are all universally progressive. In the consumer market there are now very few interlaced displays, except 5+ year old SD TVs.

Broadcasters are not obliged to broadcast interlace (they have progressive choices) and interlace is a total pain to encode: 1080i will always look worse on a destination screen than 720P even with the reduced resolution of 720.

what about fast moving motion philip

A very, very old LCD might struggle to keep up but nothing less than 5 year old should have a problem.

I am in total agreement about interlaced vs progressive, but it’s still around unfortunately. It was a joyous day when, after years, I was finally able to standardize 720p60 at my current employer. For a long time we were stuck with 1080i because that’s what the Sony HDV cameras shot at and that’s what the network wanted for delivery.

Now we can shoot 720p60 to cards, edit in 720p60 and, if needed, use our Blackmagic cards to cross-convert 720p60 to 1080i60 on the fly for tape layoff. Most of our content goes to the ‘net and eliminating interlace from the equation makes it easier to get better looking video onto our sites.

What about bit-depth? I was looking into the bit depth capabilities of OS X after reading a review of Lion lamenting the lack of 10-bit video support. Apparently 8 bits per channel RGBA is the desktop standard for all major OSes, though I also found hints that with the right GPU, connected via DisplayPort to the right display, that 10-bit desktop monitoring was possible. There is unfortunately no technical specification to confirm this for any Apple hardware.

Broadcast gear and I/O cards like to tout their 10-bit colorspace, and rightly so since SDI is carrying a 10-bit signal encoded as 4:2:2 YCbCr. So even if ColorSync is giving you an accurate relative color rendition on the display, are you missing detail due to the 8 bit per channel limitations of the typical computer monitor? And does that matter?

Of course, if this is indeed merely a limitation of the OS and its host’s video capabilities, then accurate it-just-works 10 bit monitoring for FCPX might not be that far away.

Hey Philip. Great article that’s deep enough I’m going to need to read it again.

I’m sure most people like myself will at the end of it, be opening up their display preferences to see if their Cinema Display is Colorsync Managed. I’ve got a last generation ADC 24″. What process, if any, do I need to go through to calibrate it- any handy links?

You also talk about a ColorSync utility, any more info? Is this in FCPX?

Andrew, you’re right that most desktop OSs are currently 8 bit, so that will show slightly more banding than if the full 10 bits were displayed. It shouldn’t affect color accuracy significantly. Good thing is that, when the OS gets 10 bit support, so does FCP X.

OTOH, it’s probably already more accurate than using domestic tvs as monitors, which seems to be the defacto for bays I go into.

Marcus, by default all Apple monitors include a colorsync profile, which is generic for that model. If you want to be more specific, you will need to do a display calibration for that specific monitor. Better would be a calibration tool (colorimeter) that actually read the values out of the monitor and calibrated accordingly, but they are expensive. (It’s a good service business opportunity though). You can create your own colorsync display profile from System Preferences > Displays > Color then click the Calibrate… button.

Good stuff, as usual, Philip! And, as your posts so often do, this requires a recalibration of where my preconceived notions meet my years of experience. No calibrated monitors? No vectorscopes or waveform monitors? Madness! And yet, it all makes sense, and makes things easier for all of us. (Which is why some will reflexively hate it.)

I wouldn’t say that it doesn’t require calibrated monitors – for color accuracy all monitors should be calibrated although the default color profile will probably get you closer than a domestic TV would.

sorry please explain 601 and 709 color space in relation to lcd monitors & adobe color space for example

would be good to get your 101 on this

There’s an enormous amount of material online about Rec 709 and Rec 601 colorspaces, that can do a much better job than I can. A monitor can either display a colorspace or it can’t and that’s not really got anything to do with the type of monitor. Adobe are big proponents of Colorsync/ICC profiles, but I don’t know if that extends to video applications.

Got to agree that a colour managed workflow has to be a good thing, and the capacity for FCPX to provide color critical output to a properly profiled device is great … but (sorry) what I don’t get so much, is why the FCPX designers decided that that was simply enough, stopped there, and so did away with any and all other broadcast monitoring options … because some of us genuinely do need and/or want it. For example, those of us who DO actually use technical monitoring equipment and calibrated broadcast displays (despite the apparent lack of evidence of them in some of the places you’ve worked, they are nonetheless defacto standard in pretty much any network setting) and the lack of ability to be able to use that as is required is obviously limiting. For my sins, I currently work in the interlaced world of broadcast news, current affairs and documentary programming, and whilst heaven knows I wish interlacing would go away, it does permeate the corner of business I work in – so from acquisition through to delivery I work primarily with interlaced (HD) and nothing in FCPX currently allows me to monitor that properly. It’s frustrating to say the least.

In other words you wanted Apple to *limit* themselves to broadcast color spaces? Not web, not Digital Cinema, nor any of the colorspaces that might exist in the next decade?

Interlace is a problem but field order can be tested inside FCP X and these days almost every piece of interlaced video you deliver is deinterlaced to go to the final screen. Horribly ironic really.

“In other words you wanted Apple to *limit* themselves to broadcast color spaces? Not web, not Digital Cinema, nor any of the colorspaces that might exist in the next decade?”

Hell no Philip, I’m pretty sure my comment didn’t say or even imply that, you misunderstand me I think. I’m saying what they’ve done with color management is altogether a good thing … what’s limiting is that they’ve seemingly decided that FCPX is to be a “future ready” solution only and simply does not need to be backwards compatible. Or at least that’s how it looks from the version 1.0 perspective. What I’m hoping is that this is though just a version 1.0 decision … as I’d dearly like to see FCPX incorporate the best of both worlds ie exactly what they’ve done PLUS supporting a broadcast monitoring output solution for those that still need it. I don’t see that as limiting.

Re interlacing and its persistent prevalence in so many major broadcasting pipelines despite the fact that almost everyone at home is watching on a natively progressive display these days … yeah, don’t get me started!

Cheers

Andy

Ok, got you now. Sorry about the misunderstanding. However, there are design decisions that have to be made, and any product – doesn’t matter if it’s a chair, car or NLE – has to be designed for one customer. Trying the “one size fits all” (ie legacy support plus forward looking) will end up with something that really isn’t good at anything.

If you’re finishing for broadcast you are/should be finishing in Color/Resolve etc where you still have full monitoring and truly appropriate finishing tools.

On a rough guess I’d say less than 10% of FCP 1-7 productions ever went to broadcast. Lots still finished to DVD but even that’s more forgiving (you can deliver 23.98 pure on DVD, not on broadcast).

I honestly do not believe FCP X will ever have that traditional video pipeline through the product. From my current understanding we could have a video pipeline (traditional outputs) OR we can have a color managed pipeline. I’m not sure we can have both because there is no color management in video streams. (Where “color management” was inherent in the pipeline as long as nothing changed.)

Coming in early 2012

***Broadcast-Quality Video Monitoring***

http://www.apple.com/finalcutpro/software-update.html

Hurrah … thank the gods for that 17% Mr H. 🙂

Only too happy to be wrong about this one!

Hi Philip,

and thanks for great article! I think video hasn’t ever looked so good on cinema display that it looks now with Final Cut Pro X. Even though we can get accurate colors, one thing is interlacing and another thing is 24fps, 25fps and other non 29,97/30/60fps frame rates. Basically all computer LCDs are 60hz so 25fps video (in PAL world where I live) is never as smooth as on a real 50hz display. Of course if you’re delivering web it doesn’t matter but broadcast guys still want to see on broadcast monitor with 100% frame accuracy.

Best

Tapio Haaja

Philip. It’s nice that Apple is finally starting to integrate colorsync in with its video editor. After 10+ years of ridiculous gamma issues, it’s nice to see them try to fix something.

However, colorsync, by itself, isn’t a complete solution.

In theory, yes it can take a YCbCr and convert it to LAB from which it then converts it to RGB using its LUTs to ensure the targeted display is displaying the original YCbCr image correctly. But it cannot offer any insight into the condition of that monitor. Nor will it stop your workflow to tell you that the monitor doesn’t support the full gamut needed to display Rec601/709. It can’t even confirm if the monitor is properly calibrated. And no monitor for broadcast – in a serious broadcast setting – is ever assumed to be calibrated when installed from any factory, whether it be from FSI or Sony. The environment alone affects the perceived display characteristics, and if you’re remotely serious about color-correction, you can’t color correct without some sort of calibration (and, no, Apple’s little “calibrate” utility in the Monitor settings is not enough – even Apple’s white paper on their cinema displays agreed with that).

So the real conundrum is that colorsync is just one link in a chain that needs to be perfect in order to be useful.

The other link is the actual display and what gamut and contrast it can support. To date, I don’t believe Apple makes a single monitor that can support 100% AdobeRGB. I know a few macbookpros that can’t even support 100%sRGB (which would be a requirement to displaying full Rec 601/709 gamut). I’m assuming the same could be said for many of the iMacs. So the first thing you need to tell your readers is that if their display can’t handle the range of color specified by these Recs – which also coincides to the same gamut as sRGB, then colorsync isn’t going to help them one little bit.

There’s another issue here as well. Colorsync. How well does it work? Apple completely revamped it in 10.6.0, and it broke the way 3rd party profile/calibration software interacted with Mac OSX. Not until 10.6.2 was compatibility restored. And now, it appears to be breaking for people again. I’ve seen reports where people are finding their displays going quite dark after running profile checks through calibration methods that have become industry standard. They are having to build their profiles using older versions of ICC profiles in order to let FCPX understand what their monitor is really doing.

This is hardly inspiring. First Apple removes the option to even have a broadcast monitor to evaluate a signal that is going to broadcast. And then they tell us we should have faith in colorsync, while completely ignoring that the chain is longer than that. And, to compound that, they can’t seem to support industry standard ICC profile developments to boot.

Assuming FCP X is doing a good job managing YCbCr colorspace within its own operations, and assuming Colorsync is doing a good job of converting YCbCr to the appropriate RGB values as specified by whatever profile we select, if the monitor cannot display the full gamut, or if Colorsync cannot properly interpret ICC Profiles brought in from legitimate calibration tools, then we are still flying blind. Seems like a lot of trouble for something that’s supposed to make things simpler.

Actually, I’m shocked that you seem to treat the need for true broadcast monitoring so lightly. But if you are surviving, then who can complain. I’ve seen the results of one broadcast done from an FCP X edit. The outdoor footage had some pretty blocked up shadows – almost like the editor was color correcting from an LCD/LED colorsynced display that didn’t have the capacity to represent black. In all this discussion of gamut, we haven’t even touched on contrast and how that affects picture as well.

It won’t be long before that station’s engineers realize they’re broadcasting an inferior picture, and trace it back to the FCP X suite. I wonder what’s going to happen to that editor’s reputation? $10’s of thousands of dollars spent on production, and he thought he could trust colorsync?

I want to close this comment, however, on your reliance on the ACES initiative as some sort of proof that broadcast monitors aren’t needed. That is a deceptive argument. Theater standards should be designed around the needs of projection. Rec601/709 is built around the needs of broadcasters. When you work up a print, at some point it gets printed and compared to the original approved image. An image set for broadcast should also be approved by seeing how it looks on a calibrated monitor that can display the full YCrCb gamut defined by the needs of the broadcast community. You insist that colorsync takes care of that – even with an uncalibrated monitor. Unbelievable. But moving on, we can logically see that a digital film should be viewed under the standards set forth by the ACES for theater projection – completely different venue.

Have you read their specs? 16-bit Tiffs with a minimum of 12-bit color (10-bit if being projected from 2K at 48 fps). You do realize that the Mac OS, with its fascinating pursuits in gesture technology, can only support an 8-bit color pipe? So when you say that you can dial in DCI-P3 to get an accurate representation on your computer monitor for footage destined for theater – you’re wrong. Your little display with your little graphics card can’t handle it. Because colorsync is blind. All it can do is convert color space, but it can’t verify that the results are actually working. And if you were color-correcting these files, which generally come from sources with gamuts larger than even AdobeRGB can describe, from within FCP X, you’d be making all kinds of blind color decisions. And you wouldn’t even know it.

It’s sad to say, but Apple has this galling philosophy that assumes people will only buy from them when things are made simple. Their assessment of their clients is a pretty low view. But judging from your comments, based on conversations you’ve had with contacts at Apple, I’m beginning to think that there’s another reason they love to make things simple.

Perhaps it’s because they are simple themselves. It’s obvious their view of our industry is.

While you’re completely correct that a tiny percentage of Final Cut Pro 1-7 users did put a broadcast monitor on their system, very few of those ever calibrated it.

With FCP 1, those of us who were there then (and I suspect you were not) were constantly reminding people to use any external monitor at all. And as I noted in the article, for two movies monitoring was on Cinema Displays via AJA SDI to DVI converters; two TV series were cut on domestic class monitors; and several dozen pieces for DVD extras for a major studio were “color corrected” on a 15 year old domestic TV. Another client has done three Cinerama restorations and used a domestic monitor.

I’m sure for the niche that did care and calibrate high quality monitors the changes in FCP X are annoying and seem like a step back. OTOH, for the majority, who mostly don’t even have any video monitor on their system, this is a serious step up.

And I’m not disagreeing with anything you said, other than the fact that most FCP users will be much better off with the color management in FCP X, because few FCP 1-7 users are in broadcast or will ever have a single thing they made broadcast, even on cable. It’s a realistic view of the industry as it exists, and works within that.

Surely you’d be kidding yourself if you thought that any LCD monitoring screen could get close to describing an image in 10 bits anyway. The older Cinema Display screens were 6 bit. Current Eizos, NECs etc. are still 8 bit panels (the internals are higher) and the older reference CRT screens wouldn’t have been close either.
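To put numbers on those bit depths, here is a small sketch of how many distinct levels per channel each one gives. It is plain arithmetic and says nothing about how any particular panel dithers or processes internally; the function names are made up for this example.

```python
def levels_per_channel(bits):
    """Number of distinct code values a channel of the given bit depth can hold."""
    return 2 ** bits


def step_size(bits):
    """Smallest step between adjacent code values on a normalized 0..1 scale."""
    return 1.0 / (levels_per_channel(bits) - 1)


if __name__ == "__main__":
    for bits in (6, 8, 10):
        print(bits, "bit:", levels_per_channel(bits), "levels per channel,",
              "step", round(step_size(bits), 5))
```

Each extra two bits quadruples the number of steps available for a gradient, which is why a 6 bit or 8 bit panel can show banding on material that was graded at 10 bits.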

the important thing here with colorsync is the consistency across footage and machines. and that was pretty much all you were getting with a “broadcast monitor” anyway.

being able to hardware calibrate to REC709 and get a very decent preview on a high end screen has got to be a good thing. And being able to accurately proof different screen targets as you can with photoshop for images would be unbelievably useful.

Great article Philip.

But what about color accuracy on iOS devices? It’s rumored that next year’s iPad will be 2K. I can see some people trying to use it as a third display. SpyderGallery (http://www.wired.com/gadgetlab/2011/05/spydergallery-brings-color-calibration-to-the-ipad/) will supposedly give users the ability to color-manage their iPad. What do you see as the pros and cons for using the device in post?

Way too unproven as yet Eric but there’s definitely software out there to put the display from FCP X to the iPad – with a hi res iPad that would be amazing.

Brilliant article bro. Just what I was looking for though it threw a stick of dynamite into my purchasing plans. Your help would be invaluable to me and would surely save me money if you can answer some questions please.

I am about to upgrade my entire studio. My budget is apx $50k. The construct is a Mac Pro topped off to max. The project is a feature length, live action documentary. Scoring and mixing will be done elsewhere. Editing, motion graphics and grading will be done on the unit by me. I want the best color accuracy and presentation I can get. FCX will cut, Motion will create and Resolve will finish. I am already all digital. I have both the Ninja and Hyperdeck. My Q’s for you are:

1. What should I shoot in? I have been shooting in Pro-Res 422 (HQ). Is this the best? I want to avoid interlaced entirely.

2. Any suggestions on a camera with a budget of 6K?

3. I can afford any card I want. Or even two. Is the Red Rocket even worth it? Seems so damn expensive. I am very kinky. I can swing both ways. Color or Resolve both please me well and I am certified to fondle them both.

4. What Display would you suggest? I am getting two. Three if you answer no below.

5. Should I even buy a Broadcast monitor with your suggestions for the above?

6. I will “not” be capturing directly onto the Mac. Not ever. So I don’t need any capture cards. However, is there anything from Black Magic that you would recommend? Or do you suggest I change any part of my workflow? I may, however, decide to shoot directly to a MacBook Pro on set. Not sure yet. Depends on my DP.

My goal is (1) All-digital capture. (2) Quick transfer. (3) Accurate Color (4) Putting FCX to the test. Time loss is my biggest phobia and I will spend ridiculously to avoid it.

Any help would be tremendously appreciated and I will gladly give you thanks when the credits roll.

Nick A.

Writer, Director, Colorist, Editor and Producer on 2012’s Resistor.

There’s a day’s consulting work in those questions! But some short answers.

1) Shooting directly to ProRes 422(HQ) is going to give you one of the best quality signals for any recording medium, so a good choice. Be sure to choose progressive formats though – ProRes does support interlace.

2) Lots of good choices under 6K.

3) Red Rocket is only useful if you acquire RED footage, which you cannot currently do for under $6K – until they release Scarlet, RED don’t have a camera in your budget, so a Red Rocket would be pointless.

4) One of the best, reasonably priced displays is the HP Dreamcolor.

5) If you’re working with FCP X, you do not have the choice of a broadcast monitor output, so that’s not possible. You monitor, using ColorSync on that Dreamcolor monitor. Same as for question 6. Can’t be done with FCP X so don’t plan on it.

Philip

Hi Philip,

Just wanted to jump on here and voice my approval of bringing the idea of color managed workflows to light and how Colorsync appears to be a well designed piece of the puzzle. Also to note appreciation of your recent additions to reflect the supreme importance of having a high quality monitor you can trust.

After all, color management is an awesome tool to keep things in check throughout a workflow/pipeline, and even to the point of creating deliverables, but any operator who is put in a position of making color, lift, gamma and gain corrections (whether they call themselves a “colorist” or not) has to make those adjustments not just based on knowing how to read scopes, but based on what their very *human* eyes tell them while viewing a monitor. If the monitor is telling those eyes a LIE, or perhaps something less than the whole truth, then that operator/artist is not operating with good information. In other words, color grading is a subjective art. But that artist needs to trust that monitor.

Any monitor used for grading really needs the ability to accurately reproduce a designated, calibrated reference standard, or come darn close. All monitors can receive a calibrated signal; not all can be hammered into faithfully reproducing it. Thanks for clarifying the need for trustworthy, high quality monitoring.

I don’t buy it. A computer monitor simply does not have the physical capability to be as accurate as a broadcast monitor. After speaking to the folks at Flanders, this all sounds like hyped up pipe dreams if it’s supposed to replace broadcast monitors.

And Flanders would be the impartial one here? 🙂 Nowhere did I say that this replaced a broadcast monitor, except within an FCP X workflow, where working with a good 10 bit monitor like the Dreamcolor is going to get you quite close – close enough for all but the most critical needs, and those won’t be finishing in FCP X anyway. For the workflows that FCP X is great for, you get color that doesn’t get “broken” through the pipe and is as accurate as possible on the display on hand: better quality displays will naturally be closer.

For the majority of FCP users (yes, FCP) this will be a step up from no video monitor at all, or a domestic TV of varying kinds. If you need that level of control then you’re in Resolve or Smoke or something that has the video output you need.

So high end photo retouching requires less accuracy than broadcast does it? Many of the high end colour reference LCDs get close to the full NTSC gamut and beyond. Something very few “broadcast monitors” are capable of. Especially a huge number of screens which are old and have been in use in post facilities for ages.

Screen technology has moved much quicker than these screens have been replaced.

Is this the same FSI that sells ‘8 bit’ monitors that are ‘good enough’ for most things; some mistake surely?

Dear Philip

Would you mind asking your Apple contact what sort of ColorSync processing they are actually doing?

ColorSync can do a simple 1D LUT – which can compensate for gamma – but really fails hard when it comes to accounting for the difference in colorimetry between an Apple display and a broadcast Rec.709 display.

ColorSync can also do 3D LUTs. You NEED 3D LUTs or something of a similar level in order to accurately display a video image on your laptop. DreamColor and Flanders displays do this in realtime. Finishing software works with 3D LUTs as a matter of course. It’s an industry standard. 3D LUTs are also what ColorSync uses to navigate the difficult waters between print profiles etc.

BTW, not all ICC profiles have 3D LUT info in them. Take a look at them if you don’t believe me. But look at a good printer profile and you’ll see a wealth of information. Often in a 3D LUT-like form. Because, you know, it has to actually WORK.
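To illustrate the 1D versus 3D point, here is a minimal sketch. A 3x3 matrix stands in for the simplest possible cross-channel transform (a real 3D LUT is a more general lookup of the same kind), and both the curve and the matrix values are hypothetical, chosen only to show the behavior. Nothing here reflects what ColorSync or FCP X actually does.

```python
def apply_1d(rgb, curve):
    """Per-channel correction: each output channel sees only its own input."""
    return tuple(curve(c) for c in rgb)


def apply_matrix(rgb, m):
    """Cross-channel correction: each output channel can depend on all inputs."""
    return tuple(sum(m[row][col] * rgb[col] for col in range(3))
                 for row in range(3))


def hypothetical_gamma_curve(v):
    # Illustrative tone curve only (re-targets a 1.8 gamma to 2.2).
    return v ** (2.2 / 1.8)


# Hypothetical primaries correction; each row sums to 1 so white stays white.
HYPOTHETICAL_MATRIX = (
    (0.80, 0.20, 0.00),
    (0.05, 0.90, 0.05),
    (0.00, 0.10, 0.90),
)

if __name__ == "__main__":
    pure_red = (1.0, 0.0, 0.0)
    print("1D curves:", apply_1d(pure_red, hypothetical_gamma_curve))
    print("matrix:   ", apply_matrix(pure_red, HYPOTHETICAL_MATRIX))
```

With per-channel curves, pure red stays pure red no matter what curve is used, so an over-saturated display primary cannot be pulled back toward the Rec.709 primary. The cross-channel version adds a little green to do exactly that.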

Based on my testing so far… I’m pretty sure that FCP X’s ColorSync implementation doesn’t support 3D LUTs. Which sucks. But is not surprising, seeing as Apple has historically touted half-assed “solutions” (“Quicktime manages 1.8/2.2 gamma for you!”) which don’t work properly and actually get in the way instead.

If Apple isn’t prepared to do things properly… they could just allow us to turn ColorSync OFF and let us manage things via a 3rd-party plugin.

Instead, now if we use a 3rd-party solution, it’s going to not only have to compensate for your monitor… but compensate for Apple’s stupid ColorSync. Sounds like a recipe for banding to me.

If Apple actually have implemented GPU-based 3D LUTs, then awesome. But I think they would have advertised it if they did.

Instead I suspect that they are selling you half-baked goods. And I suspect that you can’t tell the difference because you seem to be living in an entirely un-calibrated, broadcast monitor-less environment, Philip.

Otherwise… I’m rally enjoying reading your blog!

Bruce Allen

I don’t have explicit information about 3D LUTs in ColorSync. The transforms are GPU-based and 32 bit float (as is all processing in the “linear light engine” that the new apps share).

And in 18 years in Australia making quite a lot of broadcast Television, not once was a broadcast monitor in the setup and never was material rejected either.

I get the feeling that, even if it could be proven that ColorSync worked perfectly in FCP X (with no QT legacy to deal with), a group of people won’t accept it unless it shows on a display that people pretend (frequently) is calibrated and is in a perfect viewing environment, even though the monitor alone does not give you much of an improvement unless the environment and lighting are painted and lit accordingly.

Um… really. Really enjoying your blog.

Not “rally”. Sorry!

Bruce

1. GPU-based 32-bit float linear light doesn’t matter if the underlying algorithm is insufficient – eg if it’s just a simple 1D curve on R, G and B.

2. What worked in the past may not work now.

Historically, computer displays have been much closer to TV standards (sRGB and Rec.709 share the same color primaries).

But recently, computer LCDs (incl. Apple) have moved towards VERY saturated reds, for one thing. Which can really throw you off.

We use uncalibrated monitors at our workplace too – designed title sequences and trailers for a lot of movies on them… with a great variety of color (from Captain America, Thor, Inception, District 9, Rio, Crash, The Smurfs, Clash of the Titans, Underworld to Ocean’s 13, Borat…)

Our renders went to a final online bay, but 90% of the time colors looked fine and they didn’t touch it in the finish.

Now, with modern LCDs, we are screwed. I have switched over to a color-managed solution, while my art director has kept his ancient Apple LCDs “because the colors are awful on the new Apple ones”. Our broadcast dept has bought Flanders Scientific stuff to check everything.

So, um… “not calibrating worked for us before” is not a very good argument.

Of course, if you’re a creative editor (doing stuff that’s going to be finished or QC’ed on another system), calibrating may not be necessary. If you’re doing stuff for the Web (where your clients will also be watching on a Mac monitor), calibrating may not be necessary.

But then Apple are just making things worse by offering a “solution” to color management if it doesn’t actually work.

Either:

a) you don’t need ColorSync because you don’t need an accurate simulation of Rec.709 color to do your work

or

b) you need something better than ColorSync because you do need accurate Rec.709 color and ColorSync is crap and now is just another thing that gets in the way between you and a proper solution

I am still failing to see the point of Apple’s current implementation of ColorSync.

It’s like they wanted to do it right… then someone said “um, iPad hardware can’t do 3D LUTS and we want to port FCPX there next… so let’s not do it properly. Let’s just do a hack and tell everyone their Colors are in Sync. People will trust us if we brand it as ColorSync, which does do things properly for print. They’ll never ”

Bruce Allen

for the vast majority who never used any video monitor or used a domestic TV, the ColorSync method will be an improvement on their current workflow. For those who insist on a broadcast monitor, it’s coming “early next year” so you can have the best of both worlds.

Um, most domestic TVs have more sophisticated color management than a simple 1D LUT curve.

So if ColorSync only uses simple 1D LUT curves… using a domestic TV will very likely still be more accurate.

Broadcast monitor support would make a big difference. Hopefully you can turn ColorSync OFF though… or else we might have some situation similar to FCP7, where gamma just gets screwed with in an unpredictable manner?

Bruce Allen

Hi there,

First, thank you for your instructive post Philip.

Problem is even though FCPX should beautifully deal with color management as you describe, I am experiencing gamma issues with it.

Here is a link to screencaps I made on a MacBook Pro with a calibrated screen using Spyder 3.

http://ronfya.free.fr/ronfya/all_over_the_web_data/pics/gamma_problem_comparison.jpg

First row are what the video looks like in the Final Cut Pro X monitor and Premiere CS5.5

Last column is the video straight out of cam viewed in VLC, MPlayer, Quicktime X and Quicktime 7

Others are exports from FCPX and Premiere with the default H.264 settings and viewed in the same players.

After a close comparison of the screen caps, switching on/off one over another, I grouped the matches in color groups to describe the problem :

Green, yellow and red groups are each a nearly perfect match within the group. (and in the case of greens, it is probably 100% perfect because it’s Apple QT)

A rough classification from dark and saturated to bright and desaturated would be : RED, BLUE, YELLOW, GREEN, PINK.

[BLUE & YELLOW] are different but very close, [GREEN & PINK] as well. I can live with that. No problem.

But RED is very different from [BLUE & YELLOW] which are both very different from [GREEN & PINK]

The strangest thing for me being the export from Premiere which displays differently in QT7 and QTX.

And the [GREEN & PINK] group, which includes the FCPX monitor is washed out in comparison to the [BLUE & YELLOW] from Premiere and even FCPX export in QT7.

Notice that in QT7, if I check the “Enable Final Cut Studio color compatibility”preference, then the exported video from FCPX is also displaying well in QT7.

Do you have any help on this ?

Thanks

I would have no expectation that the colors would match between the one color managed application (FCP X) and five non-color managed applications. Since VLC, MPlayer, QT X and QT 7 and PPro do NOT color manage, their displays are unreliable.

Sorry for the earlier curt reply – last week was a busy one. Now I’ve had time to look at the examples, it seems that Color Sync does work!

The three apps that claim color management – FCP X, QT X and QT 7 (although QT 7 was never perfect by any means) – seem to have reproduced the same image faithfully, and the two QT players apparently picked up the ICC color profile from the camera and used it for ColorSync.

Premiere makes no claim to color manage that I know of, so it’s a bit of a random result. Neither do VLC or MPlayer so the results are consistent with expectation.

Nice work.

As I explained further down here, you have to use a V2 ICC profile with the Spyder3.

Color Management seems to be more of a joke in FCP X. I did not manage to make it work with Basiccolor Software and the Spyder 3. All I get is strange colours (green instead of white etc.)

I’m not sure what you’re testing but the ColorSync color management in FCP X is rock solid. Images display correctly on each device with a color sync profile.

Mine are most certainly not with a V2 ICC profile (V4 is not supported – which is another joke) from Basiccolor at all. Other people tell, that they had problems until they switched to a table-based profile to a matrix (which is the even bigger joke).

So please don’t ignore that something went seriously wrong with “color management” and FCP X.

sorry, switched from table-based to matrix-based

further reading:

http://photo.it-enquirer.com/2012/03/26/dont-use-table-based-monitor-profiles-for-colour-calibration/

http://chrismarquardt.com/blog.php?id=7379490227447333704

The new iPad is 99% Rec 709, which earlier tablets were not. The *only* type of color profile that FCP X supports is ColorSync. No other profiles are claimed to be supported.

ColorSync is not a profile but a technology. I recommend reading something more about color management and ICC profiles.

So I figured some things out:

Final Cut Pro X only

-supports V2 instead of V4

– matrix instead of table-based profiles

– 2.2 gamma instead of L*

All in all you could say, FCP X offers a very basic color management.
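For anyone wondering why the 2.2 gamma versus L* point matters, here is a minimal sketch comparing the two tone curves. It uses a plain 2.2 power law and the standard CIE L* lightness formula scaled to 0..1; it is an illustration of the math only, not of how any calibration package or FCP X builds or reads its profiles, and the function names are made up for this example.

```python
def gamma22_encode(y):
    """Encode linear luminance (0..1) with a plain 2.2 power law."""
    return y ** (1.0 / 2.2)


def lstar_encode(y):
    """Encode linear luminance (0..1) as CIE L*, scaled from 0..100 to 0..1."""
    epsilon = 216.0 / 24389.0   # CIE threshold between linear and cube-root parts
    kappa = 24389.0 / 27.0      # CIE slope of the linear part
    if y > epsilon:
        return (116.0 * y ** (1.0 / 3.0) - 16.0) / 100.0
    return kappa * y / 100.0


if __name__ == "__main__":
    for y in (0.01, 0.18, 0.50):
        print(y, round(gamma22_encode(y), 4), round(lstar_encode(y), 4))
```

The two curves agree at black and white but diverge in between, so software that assumes a 2.2 response while the display was calibrated to L* will show shifted shadows and midtones.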

So, how much is a program worth that is not able to work with state-of-the-art profiles? Or, to say it in other words, not able to reproduce colors the way they are meant to be?

It works with the profiles that 99.99% of the world uses. If you’re in the tiny, tiny percent that needs something more, you’re out of luck with FCP X. Oh, and with Media Composer, and Premiere Pro. Of the leading NLEs, FCP X is the only one that claims any color management.

And btw, I’ve done quite a bit of research on colorsync and FCP X including close correspondence with the development team. Whatever I’ve written has been checked for accuracy before publishing with Apple.

If you use any modern color management solution, either from Datacolor, X-Rite or Basiccolor, to name the most dominant, you will get a table-based profile, in many cases with an L* gamma.

You are absolutely right, that FCP is the only one that “claims” color management.

And from the testing I’ve seen, it is the only one that consistently displays color. Might not be the niche color workflows you desire, but better than any other tool for the 99.9995% that don’t need or use Datacolor, X-Rite or Basiccolor.

Heck with FCP 1-7 most people didn’t use any monitor at all, and of those that did the majority were domestic Televisions via a DV adapter.

I appreciate your interest in this niche, but it’s not representative of Adobe and Apple’s customers, and isn’t available for Avids.

Sorry, but your knowledge of Color Management Solutions seems to be rather limited.

X-Rite and Datacolor are the only two vendors of mainstream colorimeters. (The thingies you need to calibrate your monitor, in the first place you know)

Calling that “niche” is like calling Word a niche application.

I call it niche because they count their customers worldwide in the hundreds or low thousands. NLEs sell in the millions. The entirety of broadcast and film production is a niche – there are only 25,500 “film and TV editors” in the United States according to the Dept of Labor statistics. Like it or not but you’re a very small niche. That’s reality.

OTOH FCP 7 had 2 million seats/1.5 million unique customers; Media Composer has over 300,000 seats. PPro has similar numbers of installs to FCP. 25,500 in that context is a tiny, tiny niche.

It was you writing an article on color management. Ok, might be a niche but then, what’s the point?

Apart from that: For the little gamut of a TV you still need a TV to check. Only a YCbCr color space emulating monitor would be able to do that.

Saying their customers count in the hundreds or low thousands? Every professional photographer I know has a colorimeter from them. Didn’t know there were so few in the world…

Given that we were talking exclusively about software NLEs – specifically color management within FCP X – bringing in photographers to this discussion is irrelevant.

Good luck with everything.

I know lots of people doing both, videography and photography and they are very pissed off by strange results. See the posting of ronfya for instance.

And it is no good sign, if Apple approves a text full of errors.