Last night we arrived back from the Solar Odyssey, as our involvement finished on Sunday (long story short, “creative differences”), and today I gave myself a welcome-home present: a nice new MacBook Pro with Retina display. Naturally I immediately updated to Mountain Lion. (New machine, new OS, might as well get it all over with at once.)

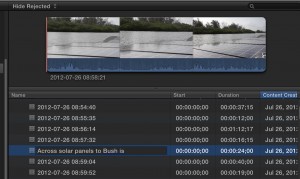

One of the first apps I looked at was Final Cut Pro X, and it's very nice to see a full-size 1080p signal in the Viewer window at 1:1 and still have a whole heap of screen real estate. (My confession: I'm using all the pixels 1:1, not in Retina mode. I already have to wear glasses for a computer screen, so I might as well capitalize on it.)

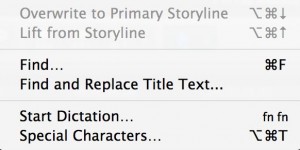

Of course I look through the menus and what do I find in the Edit menu but:

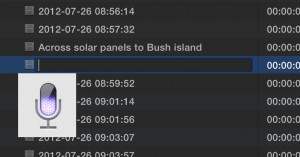

Interesting, I thought. Start Dictation? Why yes. Just press Fn twice while in an active text entry field and up comes the Dictation popover.

And it does work – mostly.

It seems to work with simple phrases, but longer text took too long to process to be truly practical as a means of data entry. (We've already been using the iOS Dictation feature on the new iPads we're using in production for our new logging app, and that works fine for a comment in the field.)

To be fair, this is a Mountain Lion feature and it even works in Final Cut Pro 7! Presumably, therefore, it will also be usable in Premiere Pro, Prelude and Media Composer on OS X Mountain Lion.

And we all get it in every app for “nothing”. No developer had to rewrite a single line of code for users to benefit. On a fast network, and with simple phrases, it does work.

18 replies on “Final Cut Pro X and Mountain Lion – now that’s interesting!”

Wow, I haven't gotten it to work even once on my Retina MBP, even with simple short phrases like “wide shot”. Needs to be beefed up, IMO.

Re-started FCPX, now it works. Cool.

Tip: to grab a screenshot of a fickle UI element such as the Dictation popover, you can capture a quick QuickTime screen recording and copy (Cmd-C) the correct frame from the resulting movie file.

Yep, I could have done that but the kludge was quicker in this instance.

Now that will come in handy…

Confirmed for Premiere Pro CS6 and Prelude. Great idea Philip!

As “fun” as this is, I personally don't ever see myself using it in this context, mostly because it makes too many mistakes here, and if I have to go in and correct them, I'm much faster simply typing it to begin with.

BUT… wouldn't this be superb for AUDIO TRANSCRIPTIONS??! How could Apple have this and NOT make it available within FCP X for just that? Make a “Transcribe” feature where the audio from any given clip is uploaded to Siri and comes back as metadata in the clip! Something that Apple of all people should be able to implement in a heartbeat, no? Or is there anything on a technical level that would make this a no-go, impractical or even impossible?

THAT would kick some serious NLE butt… 🙂

Agreed. Right now Dictation in a text field is a little slow to be really useful (although it does work well in the Logging apps).

And for sure we'd love the ability to send an already recorded file to be transcribed by that technology. The technology is not yet open for third parties to send existing files to the Dictation servers, but who knows if or when that will change.

In the meantime, use Hollywood Tools' VidKey.

Sure, additional third-party access would be great (but I'm guessing pretty unlikely for a long while yet, due to the traffic Apple would have to foot the bill for, server maintenance etc.), but really my point was that APPLE should simply enable it within X. Something along the lines of a “Send to transcript” command: the clip's audio is sent, and the text comes back and is automatically entered into the notes field or, even better, a whole new transcription field! THAT would be brilliant!

I've in fact been testing it on some interview clips I have by simply playing them loudly with dictation active. The results are FAR from perfect, but usable, and better than nothing or than having to do it all by hand. Only it's a huge pain, since you have to start the clip, switch to your text app, activate dictation etc.

And I completely don’t get what Hollywood Tools even IS! It has a description of what “Keywords”, as it’s called, DOES, but I have ZERO clue as to what it is?? An APP?? If so, where do I get/buy it?? Standalone or plugin?? X compatible?? Is there a demo?? Is it a SERVICE?? If so, how do I get IT?? What about foreign languages (that I need)?? English only??

Extremely poorly done website, I gotta say. No idea how they’re making money with that. They’ve already completely lost my interest.

It is either a service you set up on your own site/server or software-as-a-service on their server. You get back keywords with time ranges.

I agree there definitely could be more information on the site. I think currently English only.

So you get your own private Siri?? 😀

It would also be extremely helpful if they illustrated exactly what I even get back, in some way, shape or form. And again, whether and how it's in fact of any use to me, be it in the context of X or not.

But whatever. As I said, I’m not interested anymore anyway and they don’t appear to want to sell it either…

I'm experimenting with it for writing emails and parts of the many, many, many articles and scripts I have to write. I'm finding that correcting its mistakes is still faster than typing from scratch (and I'm a super fast typist). I just wish I could do a full paragraph before it timed out.

Sure Ben. And we’re all really happy that you’re not completely full of yourself.

Good day, I had a problem after the update to Mountain Lion.

If I change the speed of a clip, visible strobing appears, and Optical Flow does not help. Please write about this issue. Thank you.

As I haven’t experienced the problem, I can’t really write about it. I trust you submitted a bug report with Apple?

Very interesting indeed. Love the transcribing video idea.

Andy, let's distinguish between Nuance (the dictation/voice recognition part of Siri) and Siri itself. Siri adds human-like interpretation and interaction with web services to bring answers or do stuff. It's a huge advance, which is why it's still in beta.

Vidkey (now known as Hollywood Tools Keywords) does – afaik – a “transcription” (not good enough for use as a transcription) from which keywords are derived and applied to a range.

Definitely not Siri class, at least not yet.