I think it’s important to reiterate that “production” is an umbrella term that covers everything from big-budget feature films to corporate, education, YouTube and TikTok videos. Tools designed for high-end feature film production, usually with large crews, are probably not going to be at all relevant to a Tom Scott producing informational videos on YouTube! Solo creators and small production crews will benefit most from having their efforts amplified by ML, while the immediate effect on the next Marvel blockbuster will be negligible.

In between there are lots of exciting developments. Google had a research project in 2017 that automatically picked the best angle from a multicam shoot, or directed a single camera to stay on the subject. Carnegie Mellon and Disney Research have a project that edits from among the many cameras at an event – “social cameras.”

More practically, there’s a slew of new smart camera mounts for consumers that track an individual and keep them on camera. One of the new features of Apple’s iPad Pro, announced in April 2021, is Center Stage: the camera automatically tracks one or two people in the image and frames accordingly.

There are smart drones being trained to better follow performers, and even autonomous drones that do all the filming!

Then there is the question of what exactly “production” is. If we can create synthetic actors in synthetic sets, is that still production?

Even though production has shown the lowest infiltration by ML, there are still a lot of exciting tools coming.

Automated Action tracking and camera switching

The first time I heard of ML being used to direct and switch a multicamera live event was at an Entertainment Technology Center special event on AI in Film and TV Production back in 2017. There Google presented a research project that automatically edited live video by choosing the best angle, or directed the view of a single camera, based on audience or player attention. Unfortunately, I cannot find a direct link to this research.

The same ML model can customize trailers for specific audiences, or even create personalized versions. They used Google’s TensorFlow, Cloud Vision and Video Intelligence. No single ML model achieved the total goal, but several working together are able to handle some seemingly human tasks.

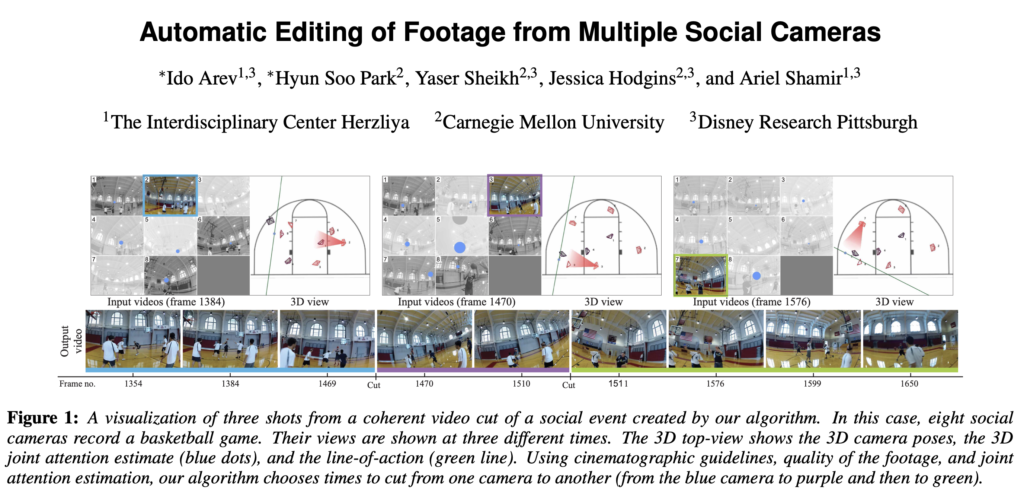

Audience interest also drives another research project, “Automatic Editing of Footage from Multiple Social Cameras.” A joint project of Carnegie Mellon and Disney Research, it seeks to edit from the many angles present at a social event with ubiquitous cameras.

Image: Social camera editing
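To make the idea of attention-driven switching concrete, here is a minimal sketch in Python. It is not the Google or Disney Research method. It assumes some upstream model has already produced a per-frame “interest” score for each camera, and the function name, the `min_hold` and `margin` parameters, and the scores themselves are all hypothetical. The only editorial rule it encodes is one every switcher knows: don’t cut too often.

```python
from typing import Sequence


def switch_cameras(
    scores: Sequence[Sequence[float]],
    min_hold: int = 3,
    margin: float = 0.1,
) -> list[int]:
    """Pick one camera index per frame from per-frame interest scores.

    scores[t][c] is a hypothetical interest score for camera c at frame t,
    assumed to come from some attention model upstream.
    """
    if not scores:
        return []
    # Start on whichever camera scores highest in the first frame.
    current = max(range(len(scores[0])), key=lambda c: scores[0][c])
    held = 0
    cut_list = []
    for frame in scores:
        best = max(range(len(frame)), key=lambda c: frame[c])
        # Cut only when the current shot has been held long enough and the
        # new angle is clearly better, to avoid jittery back-and-forth cuts.
        if best != current and held >= min_hold and frame[best] > frame[current] + margin:
            current = best
            held = 0
        cut_list.append(current)
        held += 1
    return cut_list


demo_scores = [[0.9, 0.1], [0.2, 0.8], [0.2, 0.8], [0.2, 0.8], [0.2, 0.8]]
print(switch_cameras(demo_scores))  # [0, 0, 0, 1, 1]
```

Camera 1 becomes the more interesting angle at the second frame, but the cut waits until the first shot has been held for three frames.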

In the world of YouTube and TikTok, or other smaller-scale or independent production, this is a huge amplification, particularly from those projects that are out of the lab and available.

Smart Camera Mounts

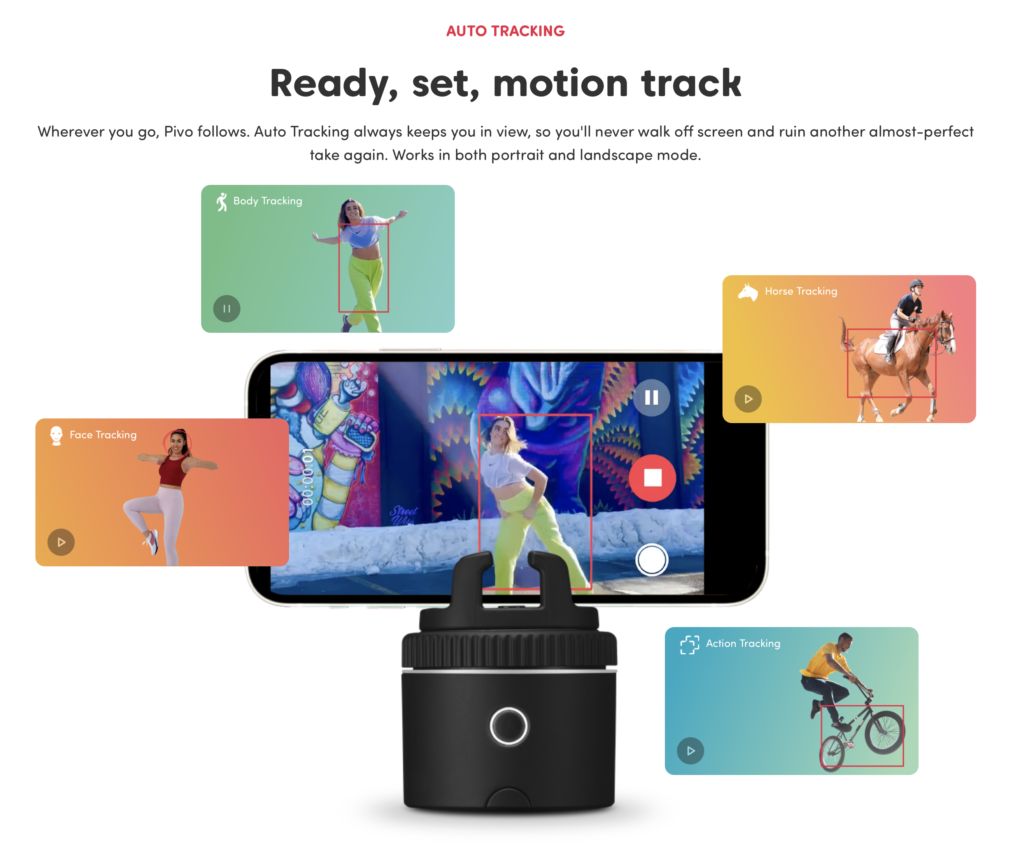

While you may not have been watching, smart camera mounts like Pivo or Pixo/Pixem have hit the market. Face and body tracking in the apps associated with the mounts uses the phone’s camera and processing power to auto-track a presenter as they walk around. They also add special effects modes so talent can replicate themselves, etc.
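How a mount turns “I see a face” into “rotate to follow it” can be sketched in a few lines. This is my own illustration, not Pivo’s or any vendor’s actual code: it assumes a face detector has already reported the horizontal center of the face in pixels, and the function name, the speed limit and the dead zone are all made-up values.

```python
def pan_speed(
    face_center_x: float,
    frame_width: float,
    max_speed_dps: float = 60.0,
    dead_zone: float = 0.05,
) -> float:
    """Return a pan speed in degrees per second (positive pans right).

    All parameters are hypothetical; a real mount would tune them.
    """
    # Horizontal offset of the face from frame center, normalized to [-1, 1].
    half_width = frame_width / 2
    offset = (face_center_x - half_width) / half_width
    # Ignore tiny offsets so the mount does not twitch while the presenter
    # is standing more or less in the middle of the frame.
    if abs(offset) < dead_zone:
        return 0.0
    offset = max(-1.0, min(1.0, offset))
    # The further off center the face is, the faster the mount turns.
    return offset * max_speed_dps


print(pan_speed(480, 1920))  # -30.0, the face is left of center so pan left
```

The dead zone is the design choice that matters most here: without it the mount would chase every small head movement.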

My experience with a Pivo has been positive. This and similar mounts are very useful tools for solo presenters like myself, making it easier for one person to create content. It’s an amplification of the individual creative, which is the core of Amplified Creativity. The “machine” empowers human creativity that might otherwise lie dormant, particularly during a lockdown when we couldn’t get together to shoot.

Without Pivo I’d have to find someone to shoot for me, communicate to them the shots that I want, then review to ensure we got what I wanted. A smart camera mount has freed me to be more creative, more often.

Although not part of any shipping Adobe app, a blog post, “The State of AI in Video,” from January 2019 reveals the company’s thinking on the subject:

AI is also useful on the production side of video, with automated camera technology improving filming options and video quality. AI-enabled camera equipment reacts to gestures, recognizes and tracks subjects, and swivels to follow action or oral commands.

OBSBOT is an “AI-Powered. Auto-Tracking Phone mount for use with any app.” It’s going to become a crowded market very quickly. As I said above, Pivo uses the camera and processing power in the phone, which is why it can do the many special effects modes. OBSBOT has its own camera and AI processing, so it is fully self-contained. The ability to work with any app – particularly Filmic Pro – made it attractive enough that I backed it while doing the research for this article. It’s working very well, until it loses sight of my face!

Even without the smart mounts, we can have automated camera tracking of one or more presenters. Apple’s iPad Pro, announced April 2021, has a feature integrated into the camera called Center Stage. From the press release:

“The new ultra wide camera on the iPad Pro pans automatically to follow you as you move around—and widens out if a second person enters.”
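Apple has not published how Center Stage works, so what follows is only a sketch of the general auto-framing idea under my own assumptions: a face detector supplies one box per person, and the “pan” is really a crop that moves around inside the ultra wide image. The `Box` type, the `frame_subjects` function and the padding value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box:
    left: float
    top: float
    right: float
    bottom: float


def frame_subjects(faces, sensor_w, sensor_h, aspect=16 / 9, padding=2.0) -> Box:
    """Return a crop of the wide image that tries to contain every face."""
    if not faces:
        return Box(0, 0, sensor_w, sensor_h)  # nobody detected: stay wide
    # Smallest box that covers every detected face.
    left = min(f.left for f in faces)
    top = min(f.top for f in faces)
    right = max(f.right for f in faces)
    bottom = max(f.bottom for f in faces)
    cx, cy = (left + right) / 2, (top + bottom) / 2
    # Add breathing room around the subjects.
    w, h = (right - left) * padding, (bottom - top) * padding
    # Grow one side so the crop matches the output aspect ratio.
    if w / h < aspect:
        w = h * aspect
    else:
        h = w / aspect
    # Shrink if the crop would be larger than the sensor itself.
    scale = min(1.0, sensor_w / w, sensor_h / h)
    w, h = w * scale, h * scale
    # Slide the crop back inside the sensor if it hangs over an edge.
    cx = min(max(cx, w / 2), sensor_w - w / 2)
    cy = min(max(cy, h / 2), sensor_h - h / 2)
    return Box(cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


solo = frame_subjects([Box(1900, 1400, 2100, 1600)], 4000, 3000, aspect=2.0)
print(solo)  # Box(left=1600.0, top=1300.0, right=2400.0, bottom=1700.0)
```

When a second face box appears elsewhere in the image, the covering box grows and so does the crop, which is the “widens out if a second person enters” behavior. A real implementation would also smooth the crop over time so the virtual camera moves gently instead of jumping.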

Autonomous Drones

While training to fly a drone for the ill-fated 2012 Solar Odyssey project, I saw a whiteboard in the drone company’s headquarters that had their software roadmap. It was obvious that everything I was training to do was going to be redundant within a few short years. Autonomous and flock-controlled drones are now a reality and they’re getting involved in production:

New developments in AI technology are making it much easier to maintain framing (camera angle and distance) while filming movie sequences from the skies. Those in the know have already seen drones which can follow a person as they jog, climb or go biking but if you turn 180 degrees away from the camera, it will film your back.

This impressive drone-specific camera technology allows a director to determine a shot’s framing (for instance: a profile shot, straight on, over the shoulder, etc.). Incredibly, these framing directions are preserved by the drone when the actors move around.

A sci-fi film called In the Robot Skies was made entirely with autonomous drones filming. Facial tracking and object avoidance using ML make autonomous camera drones much more accessible than in the days when I had to be the brains of the drone! In the Robot Skies was pitched as an example of how solo filmmakers can amplify their production:

This example may provide a glimpse into the future where solo filmmakers on tight budgets can shoot entire projects without needing human camera operator.

You can see the Robot Skies teaser on Vimeo. This is another example of collaboration between man and machine to create an amplified creative.

Similarly, at Cornell University they are researching whether a robot can become a movie director by learning “Artistic Principles for Aerial Cinematography”:

In this work, we propose a deep reinforcement learning agent which supervises motion planning of a filming drone by making desirable shot mode selections based on aesthetical values of video shots. Unlike most of the current state-of-the-art approaches that require explicit guidance by a human expert, our drone learns how to make favorable viewpoint selections by experience. We propose a learning scheme that exploits aesthetical features of retrospective shots in order to extract a desirable policy for better prospective shots. We train our agent in realistic AirSim simulations using both a hand-crafted reward function as well as reward from direct human input. We then deploy the same agent on a real DJI M210 drone in order to test the generalization capability of our approach to real world conditions. To evaluate the success of our approach in the end, we conduct a comprehensive user study in which participants rate the shot quality of our methods. Videos of the system in action can be seen here.
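The phrase “learns by experience” can be illustrated with something far simpler than the deep reinforcement learning agent the researchers describe. The sketch below swaps in a basic epsilon-greedy approach: try each shot mode, remember the average rating it earned, and mostly pick the best one while occasionally experimenting. The list of shot modes, the function names and the `rate_shot` callback (standing in for a human rating or a hand-crafted reward) are my assumptions, not details taken from the paper.

```python
import random

# Assumed set of shot modes, for illustration only.
SHOT_MODES = ["left", "right", "front", "back"]


def learn_shot_preferences(rate_shot, steps=200, epsilon=0.1, seed=0):
    """Estimate the average rating of each shot mode by trial and error.

    rate_shot(mode) is a hypothetical callback returning a reward,
    for example a viewer's score for a clip filmed in that mode.
    """
    rng = random.Random(seed)
    totals = {mode: 0.0 for mode in SHOT_MODES}
    counts = {mode: 0 for mode in SHOT_MODES}

    def value(mode):
        return totals[mode] / counts[mode] if counts[mode] else 0.0

    for step in range(steps):
        if step < len(SHOT_MODES):
            mode = SHOT_MODES[step]  # try every mode once first
        elif rng.random() < epsilon:
            mode = rng.choice(SHOT_MODES)  # explore: pick a random mode
        else:
            mode = max(SHOT_MODES, key=value)  # exploit: best mode so far
        totals[mode] += rate_shot(mode)
        counts[mode] += 1
    return {mode: value(mode) for mode in SHOT_MODES}


# A pretend audience that only likes frontal shots.
values = learn_shot_preferences(lambda mode: 1.0 if mode == "front" else 0.0)
print(max(values, key=values.get))  # front
```

The real system has a much harder job, because the best shot depends on what the actor and the surroundings are doing at that moment, which is why it needs a learned policy rather than a simple table of averages.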

Introduction, AI and ML, Amplified Creativity

- Introduction and a little history

- What is Artificial Intelligence and Machine Learning

- Amplified Creatives

- Can machines tell stories? Some attempts

- Car Commercial

- Science Fiction

- Music Video

- The Black Box – the ultimate storyteller

- Documentary Production

- Greenlighting

- Storyboarding

- Breakdowns and Budgeting

- Voice Casting

Amplifying Production

- Automated Action tracking and camera switching

- Smart Camera Mounts

- Autonomous Drones

Amplifying Audio Post Production

- Automated Mixing

- Synthetic Voiceovers

- Voice Cloning

- Music Composition

Amplifying Visual Processing and Effects

- Noise Reduction, Upscaling, Frame Rate Conversion and Colorization

- Intelligent Reframing

- Rotoscoping and Image Fill

- Aging, De-aging and Digital Makeup

- ML Color Grading

- Multilingual Newsreaders

- Translating and Dubbing Lip Sync

- Deep Fakes

- Digital Humans and Beyond

- Generating images from nothing

- Predicting and generating between first and last frames.

- Using AI to generate 3D holograms

- Visual Search

- Natural Language Processing

- Transcription

- Keyword Extraction

- Working with Transcripts

- We already have automated editing

- Magisto, Memories, Wibbitz, etc

- Templatorization

- Automated Sports Highlight Reels

- The obstacles to automating creative editing

Amplifying your own Creativity

- Change is hard – it is the most adaptable that survive

- Experience and practice new technologies

- Keep your brain young