While ML is making inroads into every aspect of production, Visual Processing and Effects certainly seems to show the most impressive results.

ML is already in upscaling, noise reduction, frame rate conversion, colorization (of monochrome footage), intelligent reframing, rotoscoping and object fill, color grading, aging and de-aging people, digital makeup, facial emotion manipulation, and I’m sure there’s more that I’ve missed.

Although they could be grouped under Visual Processing and Effects, I’ve chosen to group digital humans and deep fakes in another section. Similarly, there’s a separate section devoted to fully synthetic image creation.

If you think we’ve been able to manipulate ‘reality’ to create something new with traditional compositing and effects tools, just wait until you see what’s coming.

Noise Reduction, Upscaling, Frame Rate Conversion and Colorization

As these tools tend to be used together for processing old footage into a condition usable in a modern production, I’ve bundled them under the one heading.

An excellent example of the tools coming together is The Flying Train: footage from 1902 Germany. Compare the original footage from the MoMA Film Vault with the 60fps colorized and cleaned version.

Pro Video Coalition did a comparison shootout of AI Upscaling Software.

Colorization

Colorizing monochrome footage isn’t anything new. I recall the horror that arose when they first started colorizing Black and White movies. In fact, hand coloring of movies had been done long before then, but it was very manual and extremely labor intensive.

A new AI colorizing process takes the characteristics of the original film stock into account, and then introduces sub-surface scatter to make the image look like it was taken with a modern camera. That’s a little more sophisticated than a color wash!

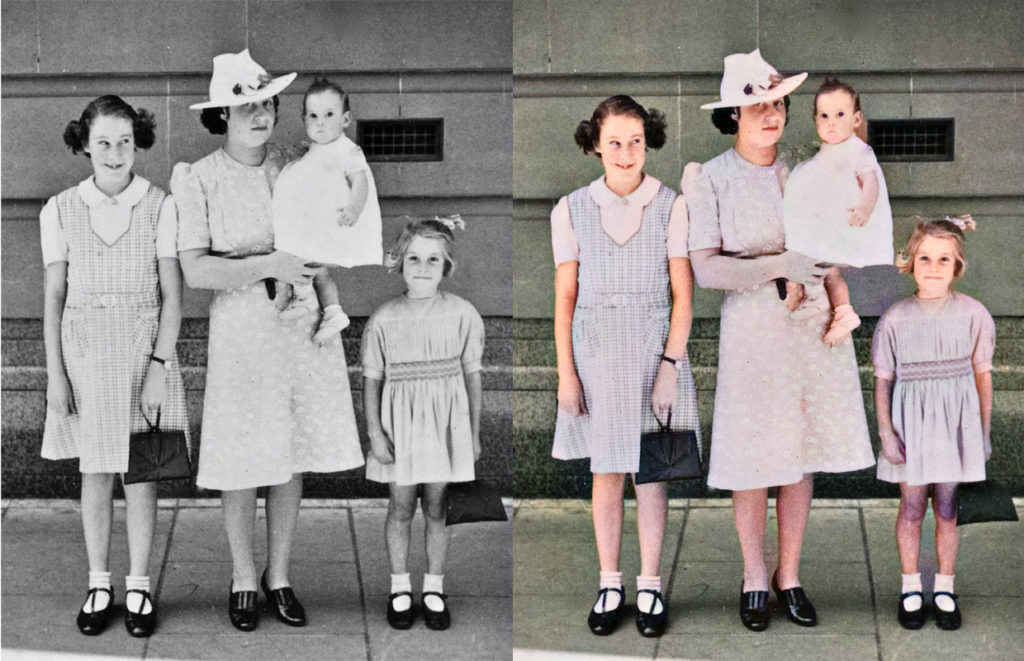

Or, at a simpler level, DeepAI will colorize film frame-by-frame from monochrome images, without the sophistication of imitating sub-surface light scatter. I had not realized how much my aunt (in this picture on the right) resembles my niece of a generation later, until I saw it in color. DeepAI reminds me more of the very old process of hand tinting black and white images with water color paints.

Upscaling/frame rate conversion

Frame rate conversion isn’t anything new. We’ve been using “optical flow” technologies for years to create in-between frames that were never shot. The technology just keeps getting better, as demonstrated in this presentation of DAIN: Depth Aware video frame INterpolation. In the demonstration they take 15 fps stop motion animation up to a very smooth 60 fps. It’s another Open Source project and you can download it for a Patreon donation.
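To make the arithmetic of that 15 fps to 60 fps example concrete, here is a minimal sketch in Python. It is not DAIN and it is not optical flow: it only works out where the new frames fall in time, then fills them with a naive cross-fade. Real optical flow and depth-aware tools move pixels along estimated motion instead of blending them. The function names and the idea of a frame as a flat list of pixel values are assumptions made for illustration.

```python
from fractions import Fraction


def inbetween_positions(src_fps, dst_fps):
    """Positions (0 < t < 1) of the new frames between two source frames."""
    if dst_fps % src_fps != 0:
        raise ValueError("this sketch only handles whole-number multiples")
    factor = dst_fps // src_fps
    return [Fraction(i, factor) for i in range(1, factor)]


def blend(frame_a, frame_b, t):
    """Naive cross-fade: a stand-in for motion-aware interpolation."""
    return [(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def convert(frames, src_fps, dst_fps):
    """Insert blended in-between frames between each pair of source frames."""
    if not frames:
        return []
    positions = inbetween_positions(src_fps, dst_fps)
    out = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        out.extend(blend(a, b, float(t)) for t in positions)
    out.append(frames[-1])
    return out
```

Going from 15 fps to 60 fps means three invented frames between every pair of real ones, at one quarter, one half and three quarters of the way along. The cross-fade here is the crudest possible way to fill them, which is exactly why motion-aware approaches look so much smoother.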

Intelligent Reframing

Intelligent Reframing comes into play when we need to convert between video formats. Framings that work well for widescreen 16:9 aren’t always going to work in a square or vertical format. We’ve seen ML driven examples in both Premiere Pro (Sensei) and in Final Cut Pro, but if you’d rather roll your own, Google published an Open Source project: AutoFlip: An Open Source Framework for Intelligent Video Reframing.

AutoFlip provides a fully automatic solution to smart video reframing, making use of state-of-the-art ML-enabled object detection and tracking technologies to intelligently understand video content.

I don’t plan on writing my own reframing software, but the existence of an Open Source project strongly indicates that the use of ML for reframing is a known and mature technology.
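For anyone curious what the geometry of reframing looks like once the ML has done the hard part, here is a minimal sketch in Python. It assumes something else (an object detector or tracker) has already supplied the subject's center point, and it simply finds the largest crop of the target shape that keeps that subject as close to center as the frame edges allow. This is not AutoFlip's API; the function name and parameters are hypothetical.

```python
def crop_window(frame_w, frame_h, aspect_w, aspect_h, subject_cx, subject_cy):
    """Return (x, y, width, height) of a crop with the target aspect ratio.

    The subject center is assumed to come from a separate detection or
    tracking step; this function only does the framing arithmetic.
    """
    if frame_w * aspect_h > frame_h * aspect_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = frame_h
        crop_w = frame_h * aspect_w // aspect_h
    else:
        # Source is taller than (or equal to) the target: keep full width.
        crop_w = frame_w
        crop_h = frame_w * aspect_h // aspect_w

    # Center on the subject, then clamp so the crop stays inside the frame.
    x = min(max(subject_cx - crop_w // 2, 0), frame_w - crop_w)
    y = min(max(subject_cy - crop_h // 2, 0), frame_h - crop_h)
    return x, y, crop_w, crop_h


# A 1920x1080 frame reframed to vertical 9:16, subject right of center.
print(crop_window(1920, 1080, 9, 16, 1500, 540))  # (1197, 0, 607, 1080)
```

The hard problems, which this sketch ignores, are deciding what the subject actually is and moving the crop smoothly from frame to frame so it doesn't jitter. Those are the parts where ML detection and tracking earn their keep.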

Rotoscoping and Image Fill

While there are many tedious jobs in the field of production, surely rotoscoping for object removal, or cloning for image fill, must be at the top of the list. I remember the excitement surrounding Puffin Designs’ Commotion when it was released with the ability to automatically clone from the same place on other frames. It was a huge step forward that made a very, very tedious job just plain tedious!

It’s an area where research has resulted in practical solutions. Adobe’s entry into the “AI Powered” world of rotoscoping, Rotobrush 2, was a leap forward in reducing the tediousness of the job.

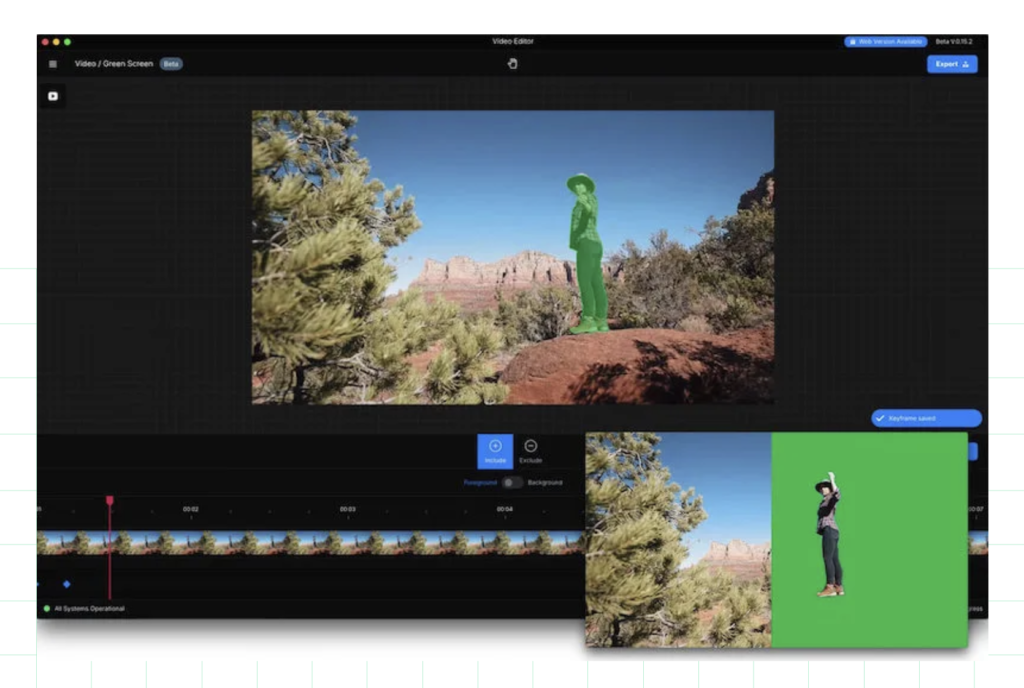

In this recent video, Rotobrush 2 came in a very clear second, ahead of b-splines and other rotoscoping methods. The clear winner in that showdown was RunwayML. A third party video has RunwayML rotoscoping three shots in ten minutes.

Keep in mind that ML models being used now will continually improve. Right now we have the ability to click on the object and it will be isolated, or removed and back filled with real imagery.

Within a week of my first draft including a reference to RunwayML’s ability to rotoscope and infill, they announced they were adding Smart Mask, which uses text to select objects.

It seems like magic, but their (experimental) demo shows selecting a red car in the image by selecting ‘red’ and ‘car’ in the text box! Want to select the sweater? Click on the word sweater and it’s done. There doesn’t seem to be a way to link to the video but it was posted in their Twitter feed.

Since then RunwayML have added Depth Map extraction and Optical Flow motion analysis for effects specialists to use in their compositions.

Adobe previewed Project Cloak in 2017, and it is now Content Aware Fill in their video apps.

In an Adobe blog post, AI Powered Creatives will Enable a Beautiful World, they reveal another project, Project Puppetron, which uses smart editing to apply a distinct artistic style to not only a single image, but to an entire video.

I’m not sure if Adobe have competing AI teams, but the same blog reveals SceneStitch, where you can select an area of an image to remove, and “AI” will search for a new image to replace the area, and then blend everything seamlessly. Similarly, with SkyReplace, ML removes the old sky, searches a host of possible replacement skies, and blends the chosen one into the existing image.

Adobe’s philosophy behind these tools sounds a lot like Amplified Creativity!

With applications like these, it’s not hard to see how AI could become not only the ultimate time-saver for creatives, but a powerful, virtual creative agent.

Aging, De-aging and Digital Makeup

Not long ago, aging or de-aging an actor wasn’t possible. Then it was available in the rarefied realm of high end film technology like Flame, which uses ML to create pixel correction and aging/de-aging tools. An article at FXguide outlines the many steps needed to create these sorts of manipulations.

If you read through that in-depth article, consider that consumer apps like Snapchat and FaceApp are doing all the processing in real time, on a hand held device. There’s an amazing amount of power in identifying and tracking the facial features, then applying ML Models to age and de-age faces.

While it’s for still images in Photoshop, Adobe’s new NVIDIA and AI-powered Neural Filters include emotion editing! The new Smart Portrait feature:

…takes headshots into the Snapchat zone, giving you a bunch of sliders for things like happiness, anger, surprise, age, hair thickness, direction of gaze, angle of head and the direction of light on the face.

Many features appear in single image processing apps first, because of the challenges of keeping results coherent across many frames, but eventually the temporal coherency problems are solved and the tool moves from still to video. We can probably start manipulating emotions in video before 2024!

Being able to easily modify the appearance of faces in real time has serious consequences, and not just on dating apps! Magda Skrzypecka wrote an article for ediblorial.com on the effects of face enhancing technology on social media and movies.

Color Grading

Arguably, up until now, most of the examples of ML in production have been to automate relatively mundane tasks. Where it has been most successful is in the symbiotic meshing of machine and man I’ve tagged Amplified Creativity.

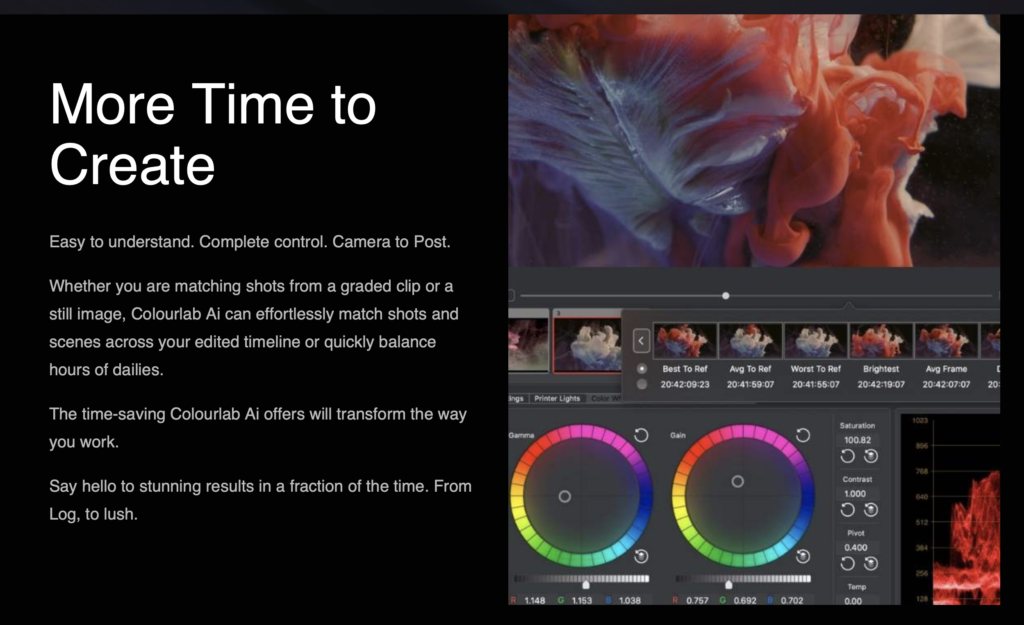

Now we move on to an application of ML that’s moving right into the creative realm. Colourlab Ai is AI powered Color Grading. It would seem that color grading requires a human eye, and it does. As with every other tool I’ve discussed, the developers see Colourlab Ai as an aid to the creative rather than a replacement:

Colourlab Ai enables content creators to achieve stunning results in less time and focus on the creative. Less tweaking, more creating.

Although the overall job is highly creative, the camera matching and color matching parts are not. Once again we see the machine enable the human to be an amplified creative.
