Beyond specific NLE implementations, ML is making its way into almost every part of the production process: storyboarding, production breakdowns, voice casting, digital sets, smart cameras, synthetic presenters, digital humans, voice cloning, music composition, voice overs, colorizing, image upscaling, frame rate upscaling, rotoscoping, background fill, intelligent reframing, aging, de-aging and digital makeup, “created” images and action, logging and organization, automatic editing (of sorts), templatorization of production, analytics and personalization, storytelling, and directing.

That’s a lot, and it’s only the examples that I’ve kept records of! I’m sure there are many more I’ve missed here.

This is a very detailed article, ultimately running to 12 posts. If you would prefer a briefer version I wrote an overview at the Frame.io blog. This version includes a lot more examples and references.

Based on recent headlines making all sorts of claims about Artificial Intelligence (AI), it’s reasonable to wonder if your job is going to be taken over by AI. Can any sort of machine do creative work? What sort of workplace will it be if AI takes over? In this article I take an in-depth look at all the ways that AI and Machine Learning (ML) are affecting every aspect of production, from storytelling to visual effects.

A shorter overview of the material is over at the Frame.io blog, as Here to Help: Machine Learning, AI, and the Future of Video Creation, which is an excellent resource. For the full story, keep reading.

Over recent years we’ve seen headlines that claim AI “edited a movie trailer,” cut narrative content in different styles, “makes a movie,” and many more like them, which has to have us all wondering if we’re going to be replaced. It doesn’t help when Wired magazine claims Robots Will Take Jobs From Men, the Young, and Minorities.

Machine Learning (ML) – where AI’s rubber hits the road – is creating a new crop of super-smart assistants we can use to amplify our personal creativity. It’s already providing (in at least some NLEs) improved retiming, facial recognition, color matching, color balance, colorizing, visual and audio noise reduction, and upscaling.

I’ve been writing about the effect of AI on the production industries since my first post in July 2010 with Letting the Machines Decide. That was the first time I wondered what effect the burgeoning field of AI might have on creative production. It remains a strong interest, because the more of the routine we can take out of the production cycle – by whatever means we can without compromising the results – the more time we can spend on the creative parts of the job. In that article I revealed my bias:

So, with that background and a belief that a lot of editing is not overtly creative (not you of course dear reader, your work is supremely creative, but those other folk, not so much!). It can be somewhat repetitive with a lot of similarities.

In 2017 I wrote a series of articles on Artificial Intelligence and Production: an overview, then in part two the 2017 “now,” and in part three my expectations of the future. I wrote a lot more on AI on that blog. Search for “Artificial Intelligence” for the full list. In that article I concluded:

Change is inevitable. Our response to it is where we have control. We can ignore or fight-off the incursion of AI and ML into our world, or we can embrace the increase in productivity and the how we can focus on the truly creative – imaginative & original – parts of what we do.

In the relatively short four years since I wrote those articles, the field has exploded. My personal interest in the field overlaps with my professional goal to free as much time for creative work as possible. We’ve created a lot of software tools (Intelligent Assistance Software, Lumberjack System) and use some Machine Learning tools in some of them.

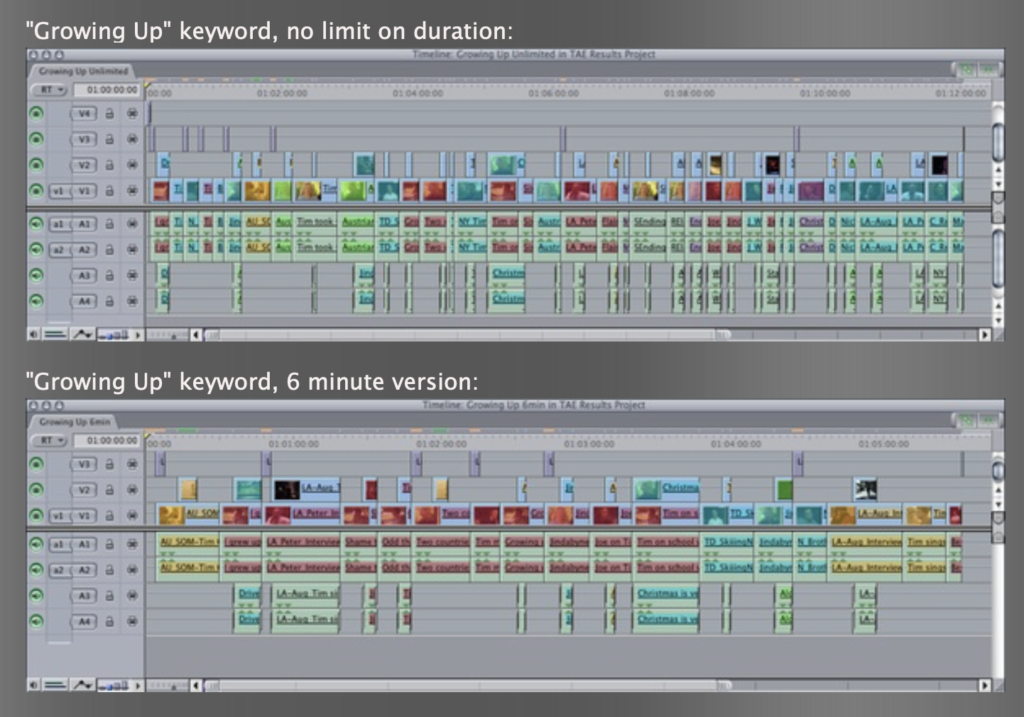

We’ve even been “accused” of creating an AI editing tool in First Cuts for FCP back in 2006. First Cuts did a very good job of an automated first string-out with story arc, appropriate b-roll and even lower thirds. It seemed like magic!

It was a technical triumph and a business failure! The perception was that entering the metadata took more time than was saved with the automated tool, totally overlooking the creative inspiration that comes by being able to explore five different string-outs in as long as it takes to play them.

The need to enter metadata was its Achilles’ heel, so I assumed that we would have useful metadata automatically derived from the media to feed into the First Cuts algorithm. I saw that as a way of creating the perfect metadata tool. As we’ll read in the section on Amplifying Metadata, ten years later we’re no closer!

We are reaching the tipping point where AI, or more accurately Machine Learning, is already in some of the tools we use every day and in new tools that are available now or coming up fast enough to affect your future career and mine.

The biggest winners from technology developments over the last 20-30 years have been individual creatives and small teams. For example, the DV format empowered thousands of creative people who had previously been held back by the cost of conventional (at the time) production crews.

As an example, I connected with Kay Stammers shortly after the release of FCP 1 via 2-pop.com. Kay and her husband Tristan ran a small independent production company that had been limited by the cost of quality production at that time. By adopting FCP and DV cameras, they amplified their ability to produce their stories manyfold.

AI and ML are the same. ML-based Smart Assistants will amplify the ability of individual creative teams to amp up their output. It’s like a super-sized version of my goals. If you embrace it, you’ll become an Amplified Creative, multiplying your output and/or upping your quality.

The headlines I referred to at the start of the article are designed to sell the story, so it’s not surprising that most are simply not true, or at best exaggerations. No machine edited a trailer, or created a movie.

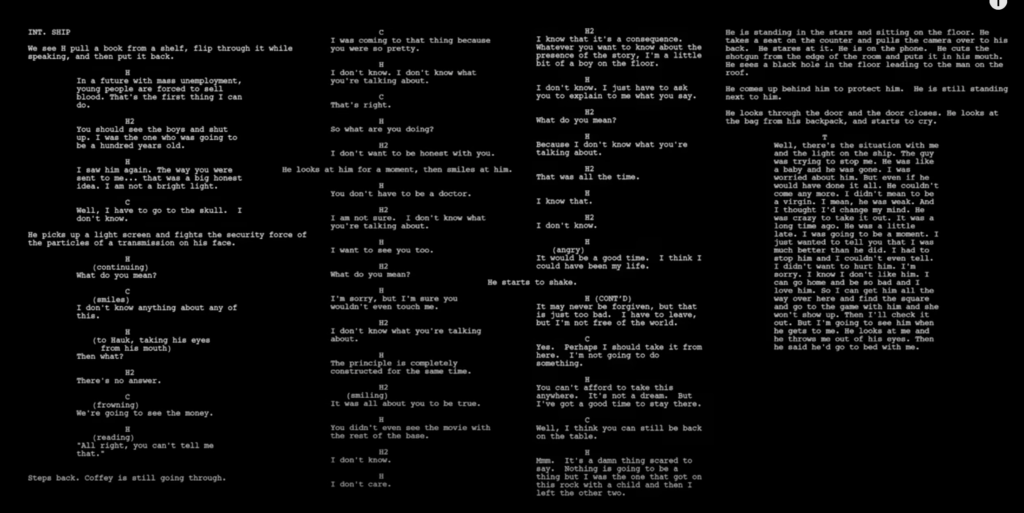

ML has been used to write scripts, with laughable results. While tools like Magisto create seemingly magic results, they are essentially smart templates with some good Machine Learning models* that determine which parts of a shot to choose. More later.

*The result of training a Machine Learning tool is a Model. The Model then continues to do the job it was trained to do. Models learn and evolve over time.

Even though the headlines are misleading, what is developing is exciting. Machine Learning models have pulled selects, extracted metadata and performed smart assistant tasks like color grading, automatically ducking under dialog, converting speech to text, and dozens of other tasks, as you’ll read in this article, right through to synthetic humans.

It’s true that ML-driven assistants will change the employment landscape, but that is the way it has always been. The Wired article I linked to at the start does feel that more jobs will be created as some are eliminated. Technology has always been a fundamental part of visual storytelling from the Lumière Brothers onward, and the staffing needs have always trended down. Filmmaking Magazine tells us:

Machines have aided and enabled filmmaking since the advent of the camera, followed shortly thereafter by innovations in sound. Later, computers would disrupt the entire process, from preproduction through post and onward to distribution and discovery. Today, a new wave of intelligent machines waits in the wings, ready to dramatically transform how stories are told and ultimately sold.

“I’ve thought a lot about the invention of photography — before that, creating photorealistic images required talent and training. Then, the camera came along. It didn’t make painting irrelevant. The camera set painting free. One of the things we’re doing is setting writing free.”

The natural response to “all of this” is to confirm that “no computer can do my job.” You’d be completely right…until the day you are not. While it’s the rare job that will be completely replaced by ML-based tools, there are many time-consuming tasks within the creative process that can be improved or streamlined by ML, just as earlier technological advances improved or streamlined other parts of production. Who off-lines tape-to-tape any more?

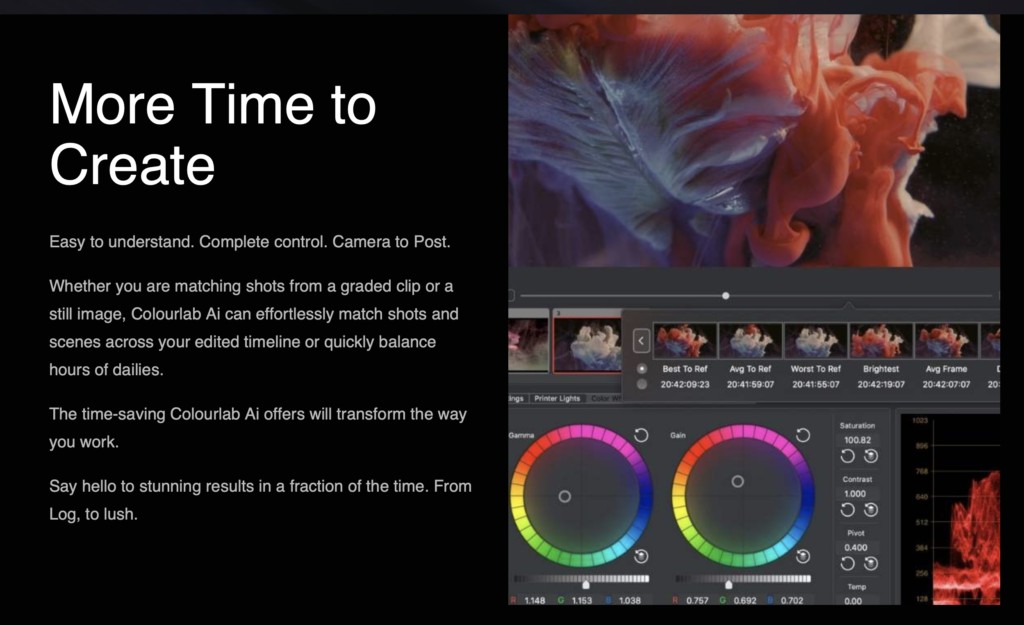

The many non-creative parts of the process get in the way of creativity, and these smart tools are going to free us to be Amplified Creatives. Freed of the boring, Amplified Creatives have more time to be creative. For example, production tasks like Color Grading will be simplified with smart grading software like Colorlab.ai, or Adobe’s Sensei color matching in Premiere Pro.

Machine Learning has certainly been very good for the audio and visual processing side of the business, providing some very exciting tools and workflows.

It has also become very good at extracting all kinds of metadata from footage: text from signage, content descriptions, brand recognition, famous people, etc., and, not least, speech to text and semantic extraction have improved to the point of usefulness. That’s very good for media libraries where you don’t know what you’ll need to search on until the time comes. In Media Asset Management more metadata is a good thing. It is less useful for extracting the organizational metadata needed for documentary and reality productions.

While ML has become good at extracting keywords, it’s still terrible at providing useful keywords: keywords relevant to the needs of the production, not generic metadata. I’ll explore this in more detail in the section on Amplifying Metadata.

The danger of ignoring technology and workflow shifts is that others will take the Amplified Creative approach and embrace every smart assistant they can to enhance their creativity and productivity. Those that don’t will appear unproductive.

Before I discuss why the Amplified Creative approach is the right one, what are AI and ML?

What is Artificial Intelligence and Machine Learning

Let’s be clear. Right now “Artificial Intelligence” doesn’t exist. Autonomous Artificial General Intelligence – the idea that a ‘machine’ could successfully perform any intellectual task that a human being can – has worried the heck out of me ever since I read Tim Urban’s two-part article The AI Revolution: The Road to Superintelligence.

We’re not there yet. We may never create a true AI. AlphaGo, Sophia, self-driving cars, and to a lesser extent personal assistants like Alexa, Siri, et al. are as close as we get to Artificial General Intelligence, and they are not close!

You will find a more detailed description of the distinctions between AI and ML at Pegasus One.

What we do have are incredible advances in Machine Learning, where a ‘machine’ (technically a bunch of Neural Networks, but we don’t really care) is typically trained on a very large number of graded examples, and then “learns” what it is supposed to learn. (There are other ML approaches, and I’ll mention them later.)

For example: Stanford researchers have trained one of Google’s deep neural networks to recognize skin lesions in photographs. By the end of training, the neural network was competitive with dermatologists when it came to diagnosing cancers using images. While still not perfect, it’s an impressive result. It’s not the job of a dermatologist, but it’s a valuable tool for maximizing the productivity of the dermatologist. The Machine will do the pre-scan and anything slightly abnormal is referred to the dermatologist for final diagnosis. An Amplified dermatologist, if I may.
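The pre-scan-then-refer workflow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the scan IDs, the abnormality scores and the `refer_at` cut-off are all hypothetical, and a real system would get its scores from a trained model rather than a hard-coded list.

```python
def triage(scans, refer_at=0.2):
    """Split scans into those the model clears and those sent to the specialist.

    scans: list of (scan_id, abnormality_score) pairs, score between 0 and 1.
    refer_at: hypothetical cut-off, kept low so anything slightly abnormal
    is referred to a human for the final diagnosis.
    """
    cleared, referred = [], []
    for scan_id, score in scans:
        (referred if score >= refer_at else cleared).append(scan_id)
    return cleared, referred


# Made-up scores standing in for the output of a trained model.
scans = [("scan-001", 0.03), ("scan-002", 0.41), ("scan-003", 0.19), ("scan-004", 0.87)]
cleared, referred = triage(scans)
print("Cleared by the machine:", cleared)
print("Referred to the dermatologist:", referred)
```

The machine never makes the final call here. It only decides how much of the pile the human has to look at, which is the amplification idea in miniature.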

The ML approach differs from traditional apps in that it learns rather than being programmed. Although people think that First Cuts for FCP was some sort of ML or AI, it was most certainly not. It quite cleverly modeled and embedded a version of my approach to editing interviews into stories using classic programming.
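To make the learned-versus-programmed distinction concrete, here is a toy sketch in Python. It is not how First Cuts or any real ML tool works: the clip lengths, the “kept” grades and the single-threshold “model” are all assumptions chosen to keep the example tiny. The programmed rule has its cut-off typed in by a person, while the learned rule derives its cut-off from graded examples.

```python
# Hypothetical graded examples: (clip length in seconds, did the editor keep it?)
graded_examples = [
    (2.0, False), (3.5, False), (4.0, False),
    (6.5, True), (8.0, True), (12.0, True),
]


def programmed_rule(length_s):
    """Classic programming: a person chose the 10-second cut-off and typed it in."""
    return length_s >= 10.0


def learn_threshold(examples):
    """'Training': try each observed length as a cut-off and keep the one
    that agrees with the most graded examples."""
    candidates = sorted(length for length, _ in examples)

    def correct(cutoff):
        return sum((length >= cutoff) == kept for length, kept in examples)

    return max(candidates, key=correct)


learned_cutoff = learn_threshold(graded_examples)


def learned_rule(length_s):
    """The 'model': same shape as the programmed rule, but the number was learned."""
    return length_s >= learned_cutoff


print("Learned cut-off:", learned_cutoff)
print("8 second clip, programmed rule keeps it:", programmed_rule(8.0))
print("8 second clip, learned rule keeps it:", learned_rule(8.0))
```

Feed the learner different graded examples and its behavior changes without anyone rewriting the rule. Changing the programmed rule means changing the code.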

While we’ll see ML doing many, many exciting things (here’s my four year old list) I don’t see it even reaching the capability of First Cuts in my lifetime. Better extraction of metadata to drive that programmed algorithm for sure, because I – along with a lot of other people – believe the future of Machine learning in Post Production is symbiotic: the Amplified Creative concept.

Working in conjunction with their smart assistants, individual creative storytellers will have more tools for finishing to a high standard. Adobe agrees: in a 2018 blog post, Creativity in the Age of Machines: How AI-Powered Creatives Will Enable a More Beautiful World, Gavin Miller writes:

“So, as AI enables these things to become more spontaneous, we’ll have a larger army of people developing creative work and there will be more demand.”

I’m not entirely convinced that demand will grow that dramatically, but then I’m not of a generation making videos for their circle of friends.

Amplified Creatives

For the current part of my career, my business goal is to “take the boring out of post,” which is why I’m so bullish on ML in production. Our goal has always been to automate the boring or repetitive parts of post, so that creative people can focus on being creative. The next generation of smart assistants will process most of the repetitive work so more time can be spent on storytelling and the look and feel of the finished product.

Tools are not competition. In an article at PolSci.com summarizing a World Economic Forum panel on AI, titled The Future won’t be “Man or Machine”, it will be Symbiotic, IBM CEO Ginni Rometty, a member of a five-person panel in Davos offering their views of AI, said that technology should augment human intelligence.

“For most of our businesses and companies, it will not be ‘man or machine,’” Rometty said. “…It’s a very symbiotic relationship. Our purpose is to augment and really be in service of what humans do.”

Science Direct’s paper Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making (only the overview is available) postulates:

“With a greater computational information processing capacity and an analytical approach, AI can extend humans’ cognition when addressing complexity, whereas humans can still offer a more holistic, intuitive approach in dealing with uncertainty and equivocality in organizational decision making.”

This is a best of both worlds approach. The best of what humans will continue to bring to the table, with ever smarter assistants filling in. Machine Learning is good at a lot of things, but since it is effectively “rote learning” it’s hard to imagine a spark of complete originality arising. Humans, particularly when freed of repetitive drudgery, are very good at original thinking.

In a blog post, “Are Centaurs Real Pt 1,” Tom Wooton of Red Bee Media introduces the horsepower/manpower centaur:

“Well, very roughly and with many variations and nuances, it’s the idea that although automation – increasingly that automation facilitated by AI and machine learning – can do many tasks previously carried out by people much better than people can, automation plus people providing cognitive skills at the right point will outdo pure automation by itself. That’s your centaur – part horsepower part personpower.”

He cites chess as an example. Computers now beat grandmasters, but a grandmaster plus a computer beats computers (until it reaches the point where every game ends in a draw, which then takes the human benefit out of the equation). According to Wooton:

“In this model, humans are seen to add value to the process of automation. They perform a role of bringing expertise, experience, imagination and insight to prioritise and pick from the heavy processing of large amounts of data and algorithmic pattern-finding. In this model, Kahneman’s System 2 thinking brings value to big data crunching. In the knowledge economy especially, humans only bring value when placed at the right point in the automation chain.”

As creatives, we have to constantly adapt our tools. Digital Non-linear editing was so much more efficient than tape-to-tape offline, even when both still needed a high end edit bay to conform in!

We’ve gone from an era of 40 lb “portable” standard definition cameras and recorders to a pocketable device that records at least eight times the resolution with better color fidelity, at frame rates unheard of at the time. That same device runs NLE software – LumaFusion – to edit, title, grade, add effects, encode and publish to streaming platforms with little setup or effort.

Other apps on that same device can switch multiple cameras and live stream them to the Internet. Even 20 years ago that was a truck and a couple of hours setup, and an expensive uplink. Now your ‘truck’ also doubles as your phone!

The tools evolve, and smart creatives take advantage of the way the symbiotic model frees them to spend more time on the “personpower” that Wooton described. These digital tools will enable individual creatives to produce high quality finished stories.

In Gavin Miller’s 2018 post on Adobe’s blog that I referred to earlier, Creativity in the Age of Machines: How AI-Powered Creatives Will Enable a More Beautiful World, he warns about the potential for abusing these technologies and then concludes:

When everyone can attain a certain standard of visual quality, it will force those who are truly gifted to set a new standard of creativity and originality. And I think the world will be even more beautiful and entertaining as a result.

Now in 2021 there is a wide range of ML-based tools available across the whole spread of production: from indicating which scripts are likely to succeed, to micro-targeting audiences, to digital humans, to AI color grading, and so, so much more.

I’ll discuss some of the specific smart assistants that are available now, or soon will be, across the entire production process, and that will turn you into an Amplified Creative.

Not all production is “Hollywood” and the vast majority of production that’s outside “Film and TV” will benefit from amplified production. Solo producers and small groups will benefit most from the smart camera mounts (and software), autonomous drone camera platforms, and automatic switching tools.

Let’s take a look at how ML is now, or soon will be, affecting each area of production in turn.

Introduction, AI and ML, Amplified Creativity

- Introduction and a little history

- What is Artificial Intelligence and Machine Learning

- Amplified Creatives

- Can machines tell stories? Some attempts

- Car Commercial

- Science Fiction

- Music Video

- The Black Box – the ultimate storyteller

- Documentary Production

- Greenlighting

- Storyboarding

- Breakdowns and Budgeting

- Voice Casting

- Automated Action tracking and camera switching

- Smart Camera Mounts

- Autonomous Drones

Amplifying Audio Post Production

- Automated Mixing

- Synthetic Voiceovers

- Voice Cloning

- Music Composition

Amplifying Visual Processing and Effects

- Noise Reduction, Upscaling, Frame Rate Conversion and Colorization

- Intelligent Reframing

- Rotoscoping and Image Fill

- Aging, De-aging and Digital Makeup

- ML Color Grading

- Multilingual Newsreaders

- Translating and Dubbing Lip Sync

- Deep Fakes

- Digital Humans and Beyond

- Generating images from nothing

- Predicting and generating between first and last frames.

- Using AI to generate 3D holograms

- Visual Search

- Natural Language Processing

- Transcription

- Keyword Extraction

- Working with Transcripts

- We already have automated editing

- Magisto, Memories, Wibbitz, etc

- Templatorization

- Automated Sports Highlight Reels

- The obstacles to automating creative editing

Amplifying your own Creativity

- Change is hard – it is the most adaptable that survive

- Experience and practice new technologies

- Keep your brain young