Anyone who’s been in the production industries for any length of time has already navigated many technology changes: from analog to digital, SD to HD, SDR to HDR and so on. Avid Media Composer was first out of the digital Non-Linear Editing gate, and pretty much every other interface was inspired by the design decisions made there, until Final Cut Pro X rethought some of those assumptions.

Now there is a whole new generation of editing tools, from RunwayML through Builder NLE, Reduct, and Synthesia to whatever Adobe’s Project Blink evolves into, that take quite different approaches to solving the problem of organizing media into compelling stories.

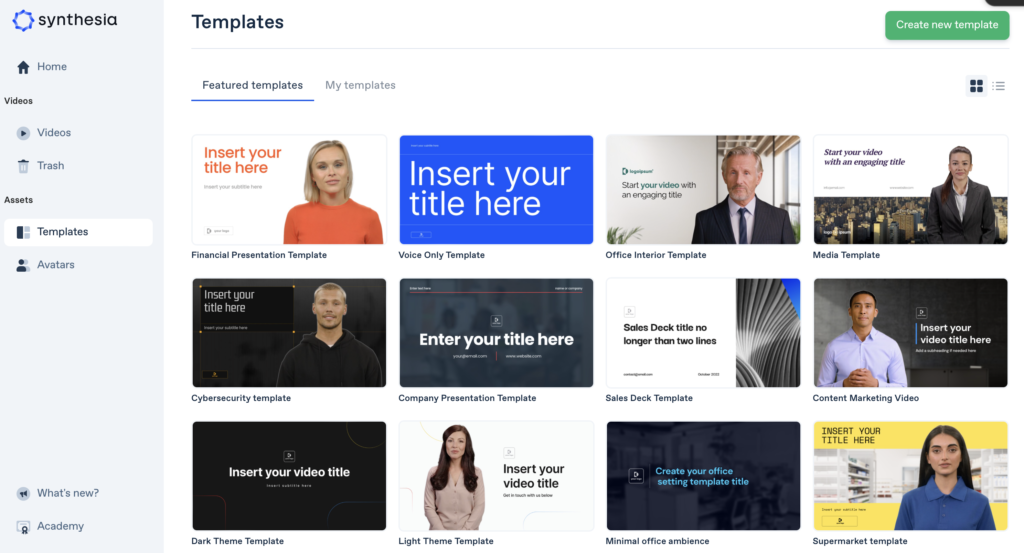

PowerPoint to Video with Synthetic Avatars

Synthesia’s focus is more on using their presenter avatars in the corporate market, but they are building out their editing tools with the intent of it becoming a one-shot “create and edit” solution. Its corporate bias is obvious from the extensive tools for repurposing PowerPoint presentations.

Your avatar is driven by a text panel, and you can composite stills and video into the slides. We use Synthesia for some of Lumberjack System’s training and promotional videos. The second generation avatars avoid the few pitfalls of the earlier generation, and are quite believable – in small amounts.

Edit Video using Text

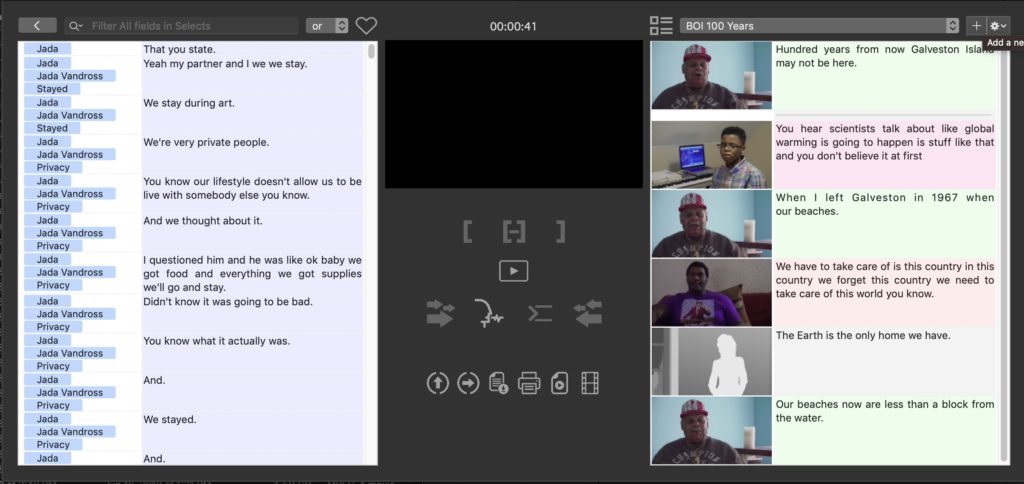

Builder NLE, Reduct, Descript and, to a very limited degree, Premiere Pro all use text to edit video in some way. While Adobe seem to feel like they’ve invented editing video with text, that honor goes to Dr Gregory Clarke and me with our 2010 app prEdit. Released just before FCP X was announced, it quickly sank into oblivion, to be resurrected as Lumberjack Builder NLE in August of 2018, when most of the other companies in the field were being founded.

Other than Builder and Reduct, transcript-based “editing” tools can edit only one transcript at a time, which makes them suited only for simple cut-down edits, which is where Descript has its starring moment. The current Premiere Pro Beta of the 2023 release includes rudimentary tools for editing video with text, but there is no way to review the story you’re creating in text form.

Builder NLE and Reduct are the most complete, allow editing from unlimited transcripts into one story, and are the only services to support multicam in both Premiere Pro and Final Cut Pro. While Builder NLE is a much more complete text-driven editing experience, there is some overlap. Builder NLE doesn’t require uploading to the service (as do Reduct, Descript, and Simon Says Assemble), so it is much more suited for documentary and reality projects. Uploading 20-100 hours of material is going to delay the start of collaboration! Reduct is strong on collaboration (being online) while Builder NLE is stronger for serious story building.

In a Sneak Peek at Adobe Max 2022, they previewed Project Blink, which also uses an “editing with text” metaphor. Blink has the additional ability to search by visuals within the image and by people. The technology and metadata guru in me is excited by this. Then the more practical brain kicked in, wondering in what context – other than the cut down of a keynote presentation – Blink would be more useful than Builder NLE or Reduct. If you are focused on story, then it probably won’t come from visuals. If you’re focused on visuals, you’ve likely already edited the story.

As a funny aside, I couldn’t help observing that everything in the Project Blink demo would have been more easily done with a little real-time keywording during the keynote!

The comparison chart I put together recently for Lumberjack System doesn’t include Blink as it’s still a technology preview.

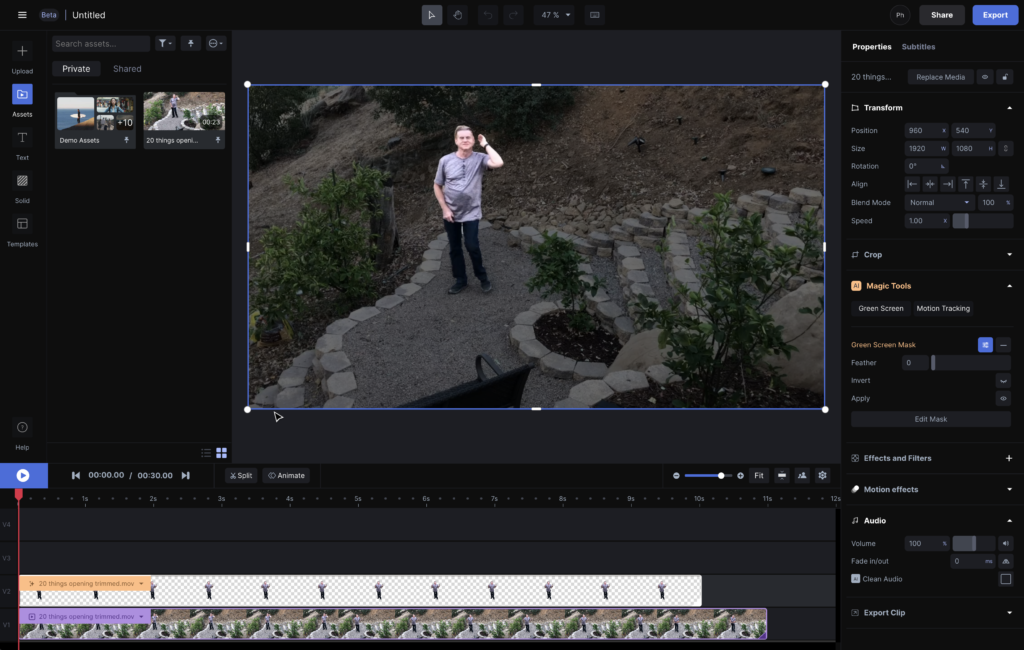

Text Prompted Video Editing

The most interesting tool, and one that I intend to spend time exploring with a (personal) music video project, is Runway ML: text-prompted editing. From the teaser video, it seems more focused on editing the image, rather than fine video trimming as you’d get in a mainstream NLE, but the ability to do instant keying of objects, remove objects, apply looks, generate images from text prompts, clean audio, generate transcripts, et al. interests me a lot. The delight of getting a decent key with one click on an object, not the background, cannot be downplayed. A lot of Runway ML seems like magic, as do all sufficiently advanced technologies.

Runway ML, or its technological children and grandchildren, are the future direction of editing in general. Perhaps not in the very conservative feature film and television editing worlds, but in the world of general creativity with video, it will be killer because it will be like having a very smart assistant there to help with the grunt work. As my recent career has been devoted to taking grunt work out of post, it’s quite obvious why I’m so positive about this technological direction.

I plan on using RunwayML on a personal music video project in the near future.

2 replies on “Editing Tools are Evolving – Again!”

Great look at what’s right around the corner. The “very conservative world” needs to get more efficient. My take is that Runway ML, as it matures, will supplant the human Assistant Editor. The role will not completely disappear, but it will cease to be a lucrative career.

Similarly, I can envision DIT ML. The time a director or Production Manager spends defining the output of the DIT can be spent teaching software.

Agreed that ML will take on many of the assistant roles.