The magic of our industry has always been to create something that isn’t real out of some real and some created elements. Imagine if those elements were just a bunch of pixels and you have a grasp of where this is going: creating video out of a description alone.

This is another necessity for Mike Pearl’s ‘Black Box’, and it would be a great tool even for educational and corporate production. Instead of Alton Brown needing elaborate props and sets to illustrate the inner workings of culinary concoctions, simply describe the example you want, and your Black Box will create it for you. In full 8K HDR!

Okay, we are nowhere near that yet, but the research is surprisingly advanced. Keep in mind how much advancement we’ve seen over two years in other examples, like Jukebox between 2014 and 2016: from 80’s video game to senior living commercial backing track!

These may be research projects now, but I expect them to be very exciting when I update this article in 2023!

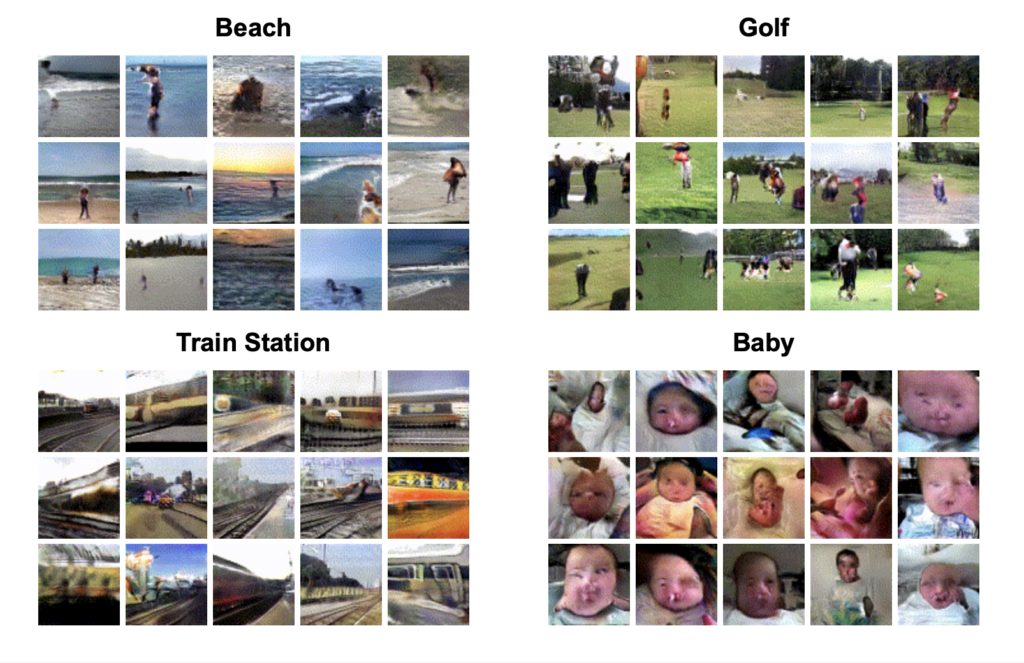

Let’s start back in 2016, when AI researchers at MIT used a GAN similar to what we talked about in the Digital Human section. They pitted a Neural Network generating video based on a still frame against another Neural Network trained to pick out fake video, with a feedback loop between them.
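To make that feedback loop concrete, here is a deliberately tiny sketch of the same adversarial idea. It is not the MIT team's code, and it works on single numbers rather than video: a two-parameter "generator" tries to produce numbers that look like the real data, while a two-parameter "detector" tries to tell real from fake. Every name here (`train_step`, `train`, the parameters `a`, `b`, `w`, `c`, and the target value of 4.0) is made up for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function, returns a value between 0 and 1."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_step(params, rng, lr=0.05, batch=64, real_mean=4.0, real_std=0.5):
    """One round of the feedback loop; returns the updated parameters."""
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]
    real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
    noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
    fake = [a * z + b for z in noise]  # generator output

    # Detector update: score real samples high and fake samples low
    # (gradient ascent on log D(real) + log(1 - D(fake))).
    grad_w = grad_c = 0.0
    for x in real:
        d = sigmoid(w * x + c)
        grad_w += (1.0 - d) * x
        grad_c += (1.0 - d)
    for x in fake:
        d = sigmoid(w * x + c)
        grad_w -= d * x
        grad_c -= d
    w += lr * grad_w / batch
    c += lr * grad_c / batch

    # Generator update: nudge the fakes so the updated detector scores
    # them as real (gradient ascent on log D(fake)).
    grad_a = grad_b = 0.0
    for z in noise:
        d = sigmoid(w * (a * z + b) + c)
        grad_a += (1.0 - d) * w * z
        grad_b += (1.0 - d) * w
    a += lr * grad_a / batch
    b += lr * grad_b / batch
    return {"a": a, "b": b, "w": w, "c": c}


def train(steps=2000, seed=0):
    """Run the loop for a number of rounds from a fixed starting point."""
    rng = random.Random(seed)
    params = {"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}
    for _ in range(steps):
        params = train_step(params, rng)
    return params
```

Calling `train()` runs the contest for a couple of thousand rounds. With networks this simple the two sides tend to chase each other rather than settle, which hints at why training real GANs is considered finicky. The research systems replace these four numbers with deep networks operating on whole frames.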

In the video linked above, you can see (tiny) examples of the input frame and the next 1-2 seconds of video as the Neural Network expects it to be. The results are good enough to fool the fake video detector Neural Network! They don’t quite pass the human eye yet, but the thing to remember is that these technologies iterate extremely fast.

Google have been busy, with a project from 2019 that can “create videos from start and end frames only” of very simple action, like a person walking across a simple background. According to the article:

The most impressive video clip happened to be one in which the AI system generated the next one second of crashing waves in the ocean.

According to another article at VentureBeat, a team at Google Research have made progress with:

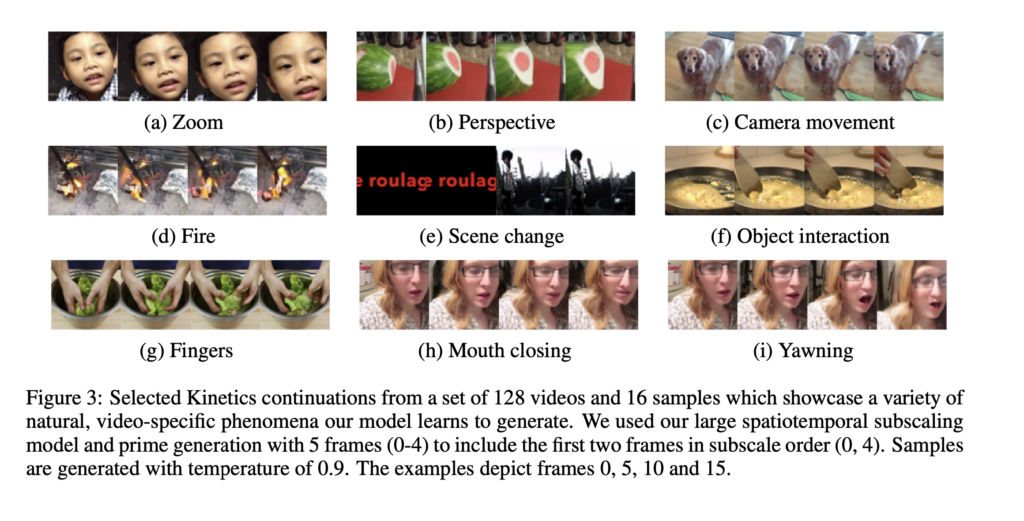

…novel networks that are able to produce “diverse” and “surprisingly realistic” frames from open source video data sets at scale. They describe their method in a newly published paper on the preprint server Arxiv.org (“Scaling Autoregressive Video Models”), and on a webpage containing selected samples of the model’s outputs.

“[We] find that our [AI] models are capable of producing diverse and surprisingly realistic continuations on a subset of videos from Kinetics, a large scale action recognition data set of … videos exhibiting phenomena such as camera movement, complex object interactions, and diverse human movement,” wrote the coauthors. “To our knowledge, this is the first promising application of video-generation models to videos of this complexity.”

https://arxiv.org/pdf/1906.02634.pdf
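For readers wondering what “autoregressive” means in that paper title: broadly, the model generates a little at a time and feeds each result back in as input for the next step. The sketch below shows only that loop. The predictor is a crude stand-in (it assumes each pixel keeps changing at the same rate), not the neural network from the paper, and the function names and the flat list-of-pixel-values frame format are assumptions made for this illustration.

```python
def predict_next(prev2, prev1):
    """Stand-in predictor: extend each pixel's most recent change,
    clamped to the 0..255 range. A real system would use a trained network."""
    return [max(0, min(255, 2 * p1 - p2)) for p2, p1 in zip(prev2, prev1)]


def continue_video(frames, n_new):
    """Autoregressive rollout: every generated frame is appended to the
    history and becomes input for the frame after it."""
    if len(frames) < 2:
        raise ValueError("need at least two starting frames")
    history = list(frames)
    for _ in range(n_new):
        history.append(predict_next(history[-2], history[-1]))
    return history[len(frames):]


# Two tiny 2-pixel "frames": the first pixel is brightening, the second is static.
start = [[0, 10], [10, 10]]
new_frames = continue_video(start, 3)
```

Because every new frame is built on earlier guesses, small mistakes compound over time, which is one reason these continuations are short.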

Nobody is predicting fully finished scenes for that Black Box in the next few years, but when they do arrive, the tools will be able to create scenes and backgrounds limited only by our ability to describe them.

I can imagine an interface like Metahumans’ where there are sliders for hills, foliage, water, etc., and they interact in real time, until that is supplanted by voice control: describe the scene, get the scene! Or, simply pluck it out of my brain.

Using AI to generate 3D holograms in real-time

Is a Holodeck program a production? When it comes to generating new pixels representing fully synthetic images, perhaps the distinction is unimportant. In May 2021 MIT published a paper:

A new method called tensor holography could enable the creation of holograms for virtual reality, 3D printing, medical imaging, and more — and it can run on a smartphone.

Technology that was expected to be more than “10 years out” now looks set to become a reality on smartphones. Not quite the Holodeck, but pretty cool.
