Storytelling has always been about painting pictures, in people’s minds or on a screen somewhere. One of the significant challenges for visual storytellers is translating the people and places of the storyteller’s imagination into a more tangible medium, like digital bits! Artificial Intelligence (AI) and Machine Learning (ML) are dramatically simplifying the way we create our visual illusions.

I discussed how AI and ML are changing visual processing in one part of my deep dive into AI/ML and Production, but this is a rapidly evolving field with new research (and tools) becoming available almost every day. We’re seeing dramatic improvements between research papers just months apart, each one leapfrogging the last in speed and quality of results.

Vintage Portraits

If you need some background portraits to inhabit your set, don’t go scrounging through thrift stores; simply create your own!

Neural network enthusiast Doron Adler has created a set of portraits that look like they were drawn in the 1950s. The portraits, generated with NVIDIA’s StyleGAN, look extremely authentic.

There’s a deeply technical explanation in the article, but the tool itself, NVIDIA’s StyleGAN, is available to everyone. You can learn more about NVIDIA’s StyleGAN here.

The portraits are generated from existing digital images, but if you don’t have suitable models or images, you can generate those too!

Generated Models

In addition to serving as seeds for your vintage portraits, royalty-free model photos generated on request can be used in visual storytelling and advertising. Generated Photos gives you control over your generated face’s age, color, hair, and other attributes.

I would be remiss if I didn’t, once again, point to Epic Games’ MetaHumans. Generated Photos creates flat digital images, while a MetaHuman is a full 3D model that can be animated within Unreal Engine, making true (human-driven) digital actors possible.

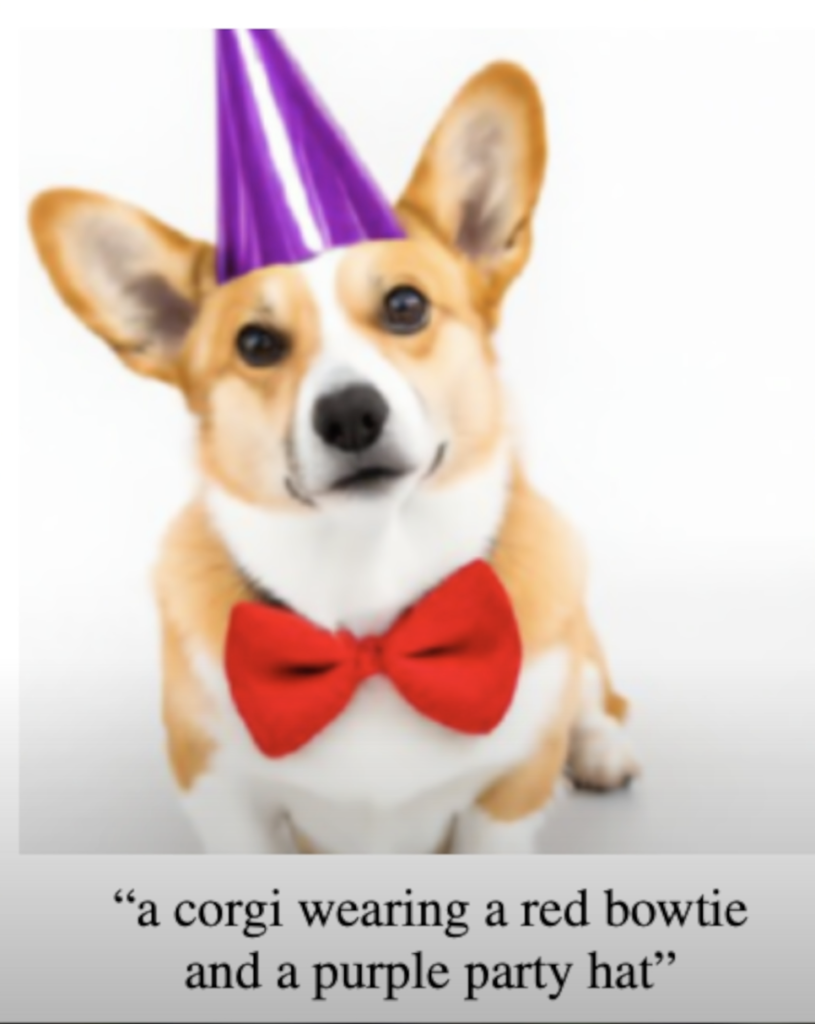

Synthetic Images on Request

Another “Two Minute Papers” presentation, this one on OpenAI’s GLIDE, opens with a very good example of how quickly the field of synthetic images (and synthetic media, in the case of 3D MetaHumans and NVIDIA Canvas) is evolving. The presentation shows how GLIDE creates images from a text description. A detailed description such as “a corgi wearing a red bowtie and a purple party hat” produces a matching image.

The AI understands geometry, art styles, lighting, and so much more. There are also examples where the requested element is added to an existing photograph.

For now, this is a research project, but imagine having it at your fingertips when you’re trying to find suitable B-roll. You could use Photoshop’s masking tools to separate the elements and animate them in your favorite tool.

Extracting 3D from 2D Photos

NVIDIA’s interest in AI/ML is definitely commercial. Many of these new tools require an enormous amount of computational power, and NVIDIA is well positioned to supply it. Another recent “Two Minute Papers” episode describes NVIDIA’s new way of deriving 3D models and scenes from flat 2D images. This requires extracting all the geometry and mapping the limited available pixel information onto the new geometry. The results are freakily amazing.

This new approach is not only much more accurate, it is also an order of magnitude faster.

Faster, Easier Ray Tracing

Adobe is another company dedicating significant resources to researching how AI/ML fits into the tools it provides for visual storytellers. A significant downside to 3D ray tracing (for accurate light modeling) is that it takes forever, so researchers are always looking for ways to speed up that rendering without compromising quality.

Two Minute Papers shows how Adobe’s “Tessellation-Free Displacement Mapping for Ray Tracing” approach brings high-quality ray-traced rendering in real time, with amazing detail. Traditionally, the more detailed the ray-traced surface, the longer each frame takes: as tessellation (the number of polygons used to render a non-flat surface) increases, the time and memory required grow exponentially.
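To put rough numbers on that growth, here is a minimal Python sketch of conventional tessellation cost. It assumes uniform subdivision in which each round splits every triangle into four, and an illustrative 36 bytes per triangle (three vertices of three 32-bit floats, with no vertex sharing). Both figures, and the function names, are hypothetical choices for illustration; the sketch models the traditional cost, not Adobe’s technique.

```python
# Hypothetical illustration of conventional tessellation cost.
# Assumption: uniform 1-to-4 subdivision, so triangles = base * 4**level.
BYTES_PER_TRIANGLE = 36  # assumption: 3 vertices x 3 float32 coordinates, no sharing


def tessellated_triangles(base_triangles: int, level: int) -> int:
    """Triangle count after `level` rounds of uniform 1-to-4 subdivision."""
    return base_triangles * 4 ** level


def memory_bytes(base_triangles: int, level: int) -> int:
    """Approximate raw geometry memory; ignores normals, UVs and acceleration structures."""
    return tessellated_triangles(base_triangles, level) * BYTES_PER_TRIANGLE


if __name__ == "__main__":
    # Print the cost for a 10,000-triangle base mesh at a few subdivision levels.
    for level in range(0, 9, 2):
        tris = tessellated_triangles(10_000, level)
        mb = memory_bytes(10_000, level) / 1e6
        print(f"level {level}: {tris:>12,} triangles, ~{mb:,.1f} MB")
```

Under these assumptions, a 10,000-triangle mesh grows to 2,560,000 triangles (about 92 MB) after four rounds of subdivision and to over 655 million triangles (roughly 23.6 GB) after eight, which is why avoiding explicit tessellation matters so much.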

Adobe’s breakthrough runs in real time (on beefy NVIDIA hardware, no doubt) and has no memory penalty. Faster, simpler 3D environments for everyone!