Microsoft Silverlight Rough Cut Editor http://tinyurl.com/ydyzcy9

Just had this brought to my attention, and – to the best of my recollection – I’ve never heard of it before. Doesn’t seem to be a “live” project anymore. Anyone know anything more?

If you can type, you can make movies… http://tinyurl.com/57y7b2

Simple avatars and typed text converted to speech do not make a “movie”. They make something that’s mildly interesting, but it’s not a “Movie”.

ABC’s Ingenious App Uses Sound to Sync iPad, TV http://bit.ly/c2n5In

ABC’s iPad app for the television show “My Generation” creates a seamless, two-screen, interactive television experience by bridging a cable/satellite connection and an iPad, two digital devices, by measuring decidedly analog sound waves using the iPad’s microphone. The app looks for certain contours in the audio signal that the Nielsen television ratings firm uses to monitor broadcasts, so that it knows when to display a particular poll or other item linking up with a precise moment in the show.

Though television companion apps exist for the iPad, this automatic syncing feature represents a big step forward. And while ABC is only rolling this out for a single program, it’s such a clever, obvious-in-retrospect idea — not to mention far easier than writing digital code to keep the devices synced wirelessly even if the user watches at someone else’s house or later, using on-demand or a DVR — that it could easily become widespread across many shows.
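The app reportedly listens for the audio contours Nielsen uses to monitor broadcasts. As a rough illustration of the general idea (sound alone telling a second screen where it is in a show), here is a sketch that swaps in a different, simpler technique: normalized cross-correlation of captured microphone samples against a known reference snippet. The sample values, the 10 Hz "sample rate" and the function names are all made up for the example; this is not how ABC or Nielsen actually implement it.

```python
import math

def normalized_correlation(a, b):
    """Correlation of two equal-length sample lists: 1.0 means the same shape,
    regardless of volume, so a quiet microphone still matches."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return numerator / denominator if denominator else 0.0

def locate_reference(reference, captured, sample_rate):
    """Slide `reference` across `captured`; return (offset_seconds, best_score)."""
    n = len(reference)
    best_offset, best_score = 0, float("-inf")
    for offset in range(len(captured) - n + 1):
        score = normalized_correlation(reference, captured[offset:offset + n])
        if score > best_score:
            best_offset, best_score = offset, score
    return best_offset / sample_rate, best_score

# Hypothetical data: a short reference pattern, and a microphone capture that
# contains silence followed by the same pattern at half the volume.
reference = [0.0, 1.0, 0.0, -1.0, 2.0, -2.0, 0.5]
captured = [0.0] * 5 + [0.5 * x for x in reference] + [0.0] * 3
offset_s, score = locate_reference(reference, captured, sample_rate=10)
print(f"match at {offset_s:.2f} s (score {score:.3f})")  # match at 0.50 s (score 1.000)
```

Once the offset is known, the app only has to look up which poll or item belongs at that moment in the show, which is why this approach works the same whether the viewer is watching live, on a DVR or at someone else’s house.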

Android’s Google Translate Conversation Mode effectively replicates Star Trek’s Universal Voice Translator http://bit.ly/cainG7

Who could read that headline and not comment?

Mobile product director Hugo Barra demoed a forthcoming update to Android’s Google Translate, Conversation Mode, which effectively replicates Star Trek’s Universal Voice Translator: it uses the phone to facilitate a two-way conversation between people speaking in different languages.

Personally, I’m hanging out for the Holodeck.

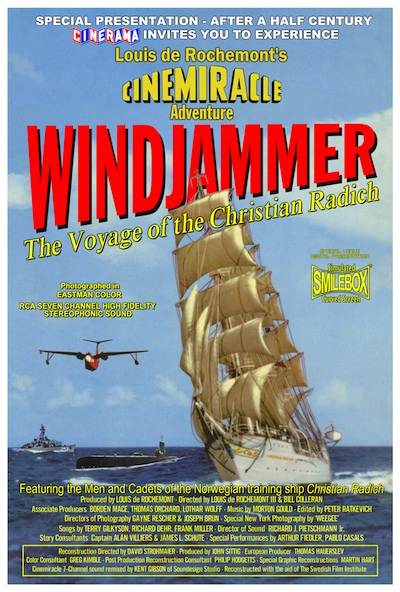

Was at the Cinerama Dome to view the restored print of Windjammer, and it occurred to me that there’s a lot of commonality between Cinerama (the three-camera/three-projector widescreen of the late ’50s and early ’60s) and 3D.

But first, a little back story. I have been a technical consultant on the restoration of Windjammer, making sure that we got the maximum possible quality from the print for the restoration.

I also advised on tools for the job. The Foundry’s Furnace Core featured prominently, as did Adobe After Effects and Final Cut Pro. I also helped set up the workflow and kept everything running smoothly.

Unfortunately, the negatives for the three panels of Windjammer are not complete. In fact, the only place the entire movie survives is a badly faded composite 35mm anamorphic print.

You can see the trailer, remastering process and how we telecined (Oh look, it’s me in the telecine bay) online, but today was the only time it’s likely to be shown in a Cinerama Theater.

David Strohmaier and Greg Kimble did a great job on the restoration – all on Macs with Final Cut Pro and After Effects.

Now, this wasn’t a full reconstruction, so we worked in HD (1080p24) but used the full height during telecine and correction so we didn’t waste any signal area on black. For the DVD, due in early 2011, the aspect ratio is corrected and a “smile box” (TM) treatment is applied to simulate the surround nature of Cinerama.

Because we were working in HD, I was pleasantly surprised by how great it looked at Cinerama size on the Arclight Theater’s Dome Cinema in Hollywood. (Trivia point: the Dome was built for Cinerama but never showed Cinerama until this decade.)

Another point of interest was that the whole show ran off an AJA KiPro, as it did in Bradford earlier in the year and in Europe last month. Each act of the 140+ minute show was contained on a single drive pack. I can’t recommend the KiPro highly enough.

So, there we were enjoying the story (and restoration work) and it occurred to me that there were strong similarities in cinematic style between “made for 3D” 3D and Cinerama.

Cinerama seams together the images of three projectors into a very wide screen view that was the precursor of modern widescreen. The very wide lens angles favor big, panoramic shots, and shots that are held rather than rapidly cut. Within this frame the viewer’s eyes are free to wander across multiple areas of interest.

Similarly, my experience of “made for 3D” 3D movies is that they are most successful when shots are held a little longer, because each time a 3D movie makes a cut, it takes the audience out of the action for a moment while we re-orient ourselves in space. (Unfortunately, there’s nothing analogous to that in the Human Visual System, whereas traditional 2D cutting mimics how the Human Visual System, eyes and brain together, works.)

Both Cinerama and 3D work best (in my humble opinion) when the action is allowed to unfold within the frame, rather than with the more fluid camera of less grand 2D formats, or of 3D shot like 2D.

Since 3D had its last heyday around the same time as Cinerama, maybe everything old is new again? Digital Cinerama anyone? (How will we sync three KiPros?)

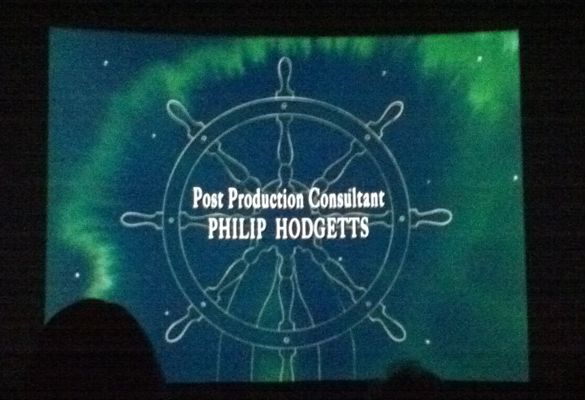

And one little vanity shot since today was the first (and likely last) time I’ve had my credit up on the big screen in a real cinema:

I’ve probably mentioned that we’re working on a documentary about Bob Muravez/Floyd Lippencotte Jnr, in part because we wanted demo footage we “owned” (so we could make tutorials available down the line), but also because I wanted to try prEdit in action on a practical job.

I start work in prEdit shortly – nearly started today, so it looks like Friday now – but we’ve already discovered some ideas that are now implemented in the 1.1 release.

Along the way I’ve learnt a lot about how well (or not) Adobe’s Speech Analysis works. (Short answer: it can be very, very good, or it can be pretty disappointing.) As prEdit is really designed to be used with transcriptions I also tested the Adobe Story > OnLocation > Premiere method, which always worked.

Well, from that workflow it became obvious that speakers (Interviewer, Subject) were correctly identified, so wouldn’t it be nice if prEdit automatically subclipped on speaker changes? And now it does.

If multiple speakers have been identified in a text transcript, prEdit will create a new subclip on import at each change of speaker.
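To make the speaker-change rule concrete, here is a minimal sketch of one way it could work, assuming a hypothetical transcript already parsed into (start time, speaker, text) tuples in time order. The `Subclip` type, the function name and the sample lines are invented for illustration; this is not prEdit’s code or its transcript format.

```python
from dataclasses import dataclass

@dataclass
class Subclip:
    speaker: str
    start: float  # seconds from the start of the source clip
    end: float
    text: str

def subclip_on_speaker_change(transcript, clip_duration):
    """Split a time-ordered transcript into one subclip per run of the same speaker.

    `transcript` is a list of (start_seconds, speaker, text) tuples (hypothetical format).
    """
    subclips = []
    for start, speaker, text in transcript:
        if subclips and subclips[-1].speaker == speaker:
            # Same speaker keeps talking: extend the current subclip's text.
            subclips[-1].text += " " + text
        else:
            if subclips:
                # A change of speaker closes the previous subclip here.
                subclips[-1].end = start
            # The newest subclip runs to the end of the clip until another speaker starts.
            subclips.append(Subclip(speaker, start, clip_duration, text))
    return subclips

transcript = [
    (0.0, "Interviewer", "Where did you grow up?"),
    (4.5, "Subject", "In Los Angeles."),
    (9.0, "Subject", "My dad raced cars."),
    (15.0, "Interviewer", "When did you start racing?"),
]
for clip in subclip_on_speaker_change(transcript, clip_duration=20.0):
    print(f"{clip.speaker}: {clip.start}-{clip.end} {clip.text}")
```

Consecutive lines from the same speaker are merged, so a long answer broken into several transcript paragraphs still becomes a single subclip.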

It also became obvious as I was planning my workflow that we needed a way to add new material to an existing prEdit project, and to be able to access the work from historic projects to add to a new one.

New Copy Clips… button so you can copy clips from one prEdit project to another open project

Now that I’m dealing with longer interviews than when we tested, I needed search in the Transcript view.

Search popup menu added to Transcript View.

That led to one problem: adding metadata to multiple subclips at a time. Previously I’d advocated adding common metadata to the clip before subclipping in prEdit (by simply adding returns to the transcript), but if the transcript comes in already split by speaker, that wasn’t going to work!

Logging Information and Comments can be added for multiple selected subclips if the field doesn’t already have an entry for any of the selected subclips.

Because you never, ever want to run the risk of overwriting work already done.
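The rule is all-or-nothing: if any selected subclip already has an entry in the field, nothing is written. A minimal sketch of that check, assuming subclips are represented as plain dictionaries with hypothetical field names (this is an illustration, not prEdit’s implementation):

```python
def add_to_selection(subclips, field, value):
    """Fill `field` on every selected subclip, all-or-nothing.

    Returns False (and changes nothing) if any selected subclip already has a
    non-empty entry for `field`, so existing logging work is never overwritten.
    """
    if any(clip.get(field) for clip in subclips):
        return False
    for clip in subclips:
        clip[field] = value
    return True

# Hypothetical selection: an empty string counts as "no entry yet".
selection = [{"name": "Interview 01"}, {"name": "Interview 02", "comments": ""}]
print(add_to_selection(selection, "comments", "Good soundbite"))  # True
print(add_to_selection(selection, "comments", "Something else"))  # False
print(selection[1]["comments"])  # Good soundbite
```

Checking the whole selection before writing anything, rather than skipping only the clips that already have entries, keeps the selected subclips consistent and makes the refusal obvious to the editor.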

And some nice enhancements:

Faster creation of thumbnails

Bugfix for Good checkbox in Story View

prEdit 1.1 is now available. Check for updates from within the App itself. And if you work in documentary, you should have checked it out already.

Computational Photography? http://gizmo.do/d9DyaV A cool new idea from Adobe. Nice concept piece (not a software announcement).

Re-adjust focus in post. Nice technology. Watch the video.

‘Interoperable Master Format’ Aims to Take Industry Into a File-based World http://bit.ly/bvF6Vk

A group working under the guidance of the Entertainment Technology Center at USC is trying to create specifications for an interoperable set of master files and associated metadata. This will help interchange and automate downstream distribution based on metadata carried in the file. The first draft of the specification is now done and is based (no surprises) on the MXF wrapper. (Not good news for FCP fans, as Apple has no native support for MXF without third-party help.)

The main new items are dynamic metadata for hinting pan-and-scan downstream, and an “Output Profile List” (OPL):

“The IMF is the source if you will, and the OPL would be an XML script that would tell a transcoder or any other downstream device how to set up for what an output is on the other side,” Lukk explained.
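The split Lukk describes is one master plus small XML documents, each describing a deliverable for a downstream device. Purely as a sketch of that idea, here is how a downstream tool might read such a document with Python’s standard `xml.etree.ElementTree`. Every element and attribute name below (`OutputProfileList`, `Output`, `Video`, `Audio`, `frameRate`, and so on) is hypothetical and invented for this example; the draft specification defines its own schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical OPL-like document. Element and attribute names are invented
# for illustration and are NOT taken from the ETC@USC draft specification.
SAMPLE_OPL = """<OutputProfileList>
  <Output name="web-preview">
    <Video codec="h264" width="1280" height="720" frameRate="23.976"/>
    <Audio channels="2"/>
  </Output>
  <Output name="broadcast">
    <Video codec="mpeg2" width="1920" height="1080" frameRate="29.97"/>
    <Audio channels="6"/>
  </Output>
</OutputProfileList>"""

def read_output_profiles(xml_text):
    """Turn each <Output> entry into a dict of settings a transcoder could use."""
    root = ET.fromstring(xml_text)
    profiles = {}
    for output in root.findall("Output"):
        video = output.find("Video")
        audio = output.find("Audio")
        profiles[output.get("name")] = {
            "codec": video.get("codec"),
            "size": (int(video.get("width")), int(video.get("height"))),
            "frame_rate": float(video.get("frameRate")),
            "audio_channels": int(audio.get("channels")),
        }
    return profiles

for name, settings in read_output_profiles(SAMPLE_OPL).items():
    print(name, settings)
```

The point of the design is that the master never changes: adding a new deliverable means writing another small XML description rather than making another master.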

The intention is to bring this draft spec to SMPTE, but first, ETC@USC is urging the industry to get involved. “We need industry feedback and input on the work that the group has done thus far,” said ETC CEO and executive director David Wertheimer. “Anyone who has interest in this topic should download the draft specification and provide feedback to the group.”

RT @iDiyas: Apple patent opens new frontier for gaming-documenting http://bit.ly/a7si9G

I’m not even sure why this interests me, but it does. Taking “action snaps” from your game at crucial moments and making them into a cartoon. Now Apple patents a lot of ideas that don’t make it into products, so this may never come to anything, but it is interesting.

Imagine an Enhanced Reality game – where extra elements are overlaid on a live camera view – and getting a comic record of the adventure.

The Future of Picture Editing http://bit.ly/aNRLVA

I had the pleasure of meeting Zak Ray when I travelled to Boston. I like people who have an original take on things, and Zak’s approach to picture editing – and his tying it to existing technologies (that may need improvement) – is an interesting one.

And yet, despite such modern wonders as Avid Media Access and the Mercury Playback Engine, modern NLEs remain fundamentally unchanged from their decades-old origins. You find your clip in a browser, trim it to the desired length, and edit it into a timeline, all with a combination of keys and mouse (or, if you prefer, a pen tablet). But is this process really as physically intuitive as it could be? Is it really an integrable body part in the mind’s eye, allowing the editor to work the way he thinks? Though I can only speak for myself, with my limited years of editing experience, I believe the answer is a resounding “no”. In his now famous lecture-turned-essay In the Blink of an Eye, Walter Murch postulates that in a far-flung future, filmmakers might have the ability to “think” their movies into existence: a “black box” that reads one’s brainwaves and generates the resulting photo-realistic film. I think the science community agrees that such a technology is a long way off. But what until then? What I intend to outline here is my thoughts on just that; a delineation of my own ideal picture-editing tools, based on technologies that either currently exist, or are on the drawing board, and which could be implemented in the manner I’d like them to be. Of course, the industry didn’t get from the one-task, one-purpose Moviola to the 2,000 page user manual for Final Cut Pro for no reason. What I’m proposing is not a replacement for these applications as a whole, just the basic cutting process; a chance for the editor to work with the simplicity and natural intuitiveness that film editors once knew, and with the efficiency and potential that modern technology offers.

It’s a good article and a good read. It raises a question, though: if Apple (or Adobe/Avid) really innovated the interface, would people “hate it” because it was “different”?